What Is Embedded AI (EAI)?

Embedded AI (EAI), short for Embedded Artificial Intelligence, is a general-purpose framework system for AI functions. It is built into network devices and provides common model management, data acquisition, and data preprocessing functions for the AI algorithm-based functions on these devices. It also sends inference results to these AI functions. Because data is analyzed on the device where it is generated, EAI makes full use of the device's sample data and computing capabilities while lowering data transmission costs, keeping data secure, and enabling real-time inference and decision-making.

Why Do We Need EAI?

The fourth industrial revolution, led by AI, has arrived. AI is profoundly changing human society and the world at an unprecedented pace. For network devices, AI technologies can create value in areas such as parameter optimization, application identification, security, and fault diagnosis.

The three core elements of AI are algorithms, computing power, and data. If every AI function supported by a device maintained all three elements independently, the device would have to supply a large amount of sample data and high-performance computing capability to each function, severely affecting its normal operation.

The EAI system provides a complete, general-purpose framework whose services AI functions can subscribe to. Once an AI function subscribes, the EAI system uses an AI algorithm to perform inference on the real-time data related to that function, and the function is implemented based on the inference result. Because the data is analyzed and inferred locally, where it is generated, this approach lowers data transmission costs, keeps data secure, and enables real-time inference and decision-making.

How Does EAI Work?

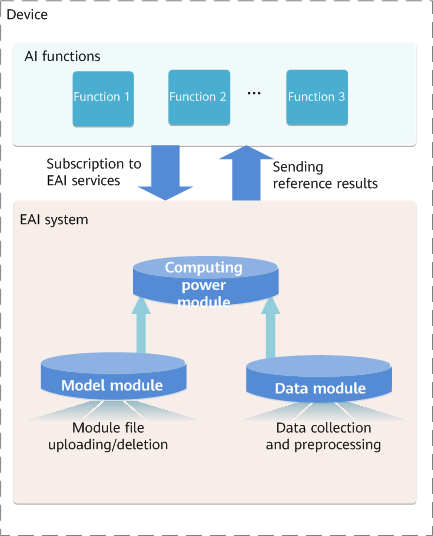

The EAI system consists of the following three modules:

- Model module: also known as the algorithm module, integrates multiple AI algorithms. It manages multiple model files, each containing one or more models, and different models correspond to different AI algorithms. Users can load and delete model files to manage the AI algorithms used by the EAI system.

- Data module: obtains and preprocesses data, and manages the massive amounts of data required by all AI functions on the device.

- Computing power module: performs inference based on the algorithms from the model module and the data from the data module, and sends the inference result to the AI functions supported by the device. These functions then analyze the inference result, generate specific configurations, and deliver the configurations to the device.
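To make the division of labor between the three modules concrete, here is a minimal Python sketch. The class names (ModelModule, DataModule, ComputeModule), the min-max scaling used as the preprocessing step, and the "mean" model are all hypothetical illustrations chosen for readability; they are not the EAI system's actual interfaces or algorithms.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical: a "model" is any function mapping preprocessed features to a result.
Model = Callable[[List[float]], float]


@dataclass
class ModelModule:
    """Manages model files; each file holds one or more named models."""
    files: Dict[str, Dict[str, Model]] = field(default_factory=dict)

    def load(self, filename: str, models: Dict[str, Model]) -> None:
        self.files[filename] = models

    def delete(self, filename: str) -> None:
        self.files.pop(filename, None)

    def get(self, model_name: str) -> Model:
        # Search every loaded model file for the requested model.
        for models in self.files.values():
            if model_name in models:
                return models[model_name]
        raise KeyError(model_name)


@dataclass
class DataModule:
    """Collects raw samples for each AI function and preprocesses them."""
    samples: Dict[str, List[float]] = field(default_factory=dict)

    def collect(self, function: str, value: float) -> None:
        self.samples.setdefault(function, []).append(value)

    def preprocess(self, function: str) -> List[float]:
        # Illustrative preprocessing step: min-max scaling to [0, 1].
        raw = self.samples.get(function, [])
        if not raw:
            return []
        lo, hi = min(raw), max(raw)
        span = (hi - lo) or 1.0
        return [(x - lo) / span for x in raw]


@dataclass
class ComputeModule:
    """Runs a model from the model module on data from the data module."""
    models: ModelModule
    data: DataModule

    def infer(self, function: str, model_name: str) -> float:
        features = self.data.preprocess(function)
        return self.models.get(model_name)(features)


# Usage: one model file with one model, serving one hypothetical AI function.
models = ModelModule()
models.load("example.model", {"mean": lambda xs: sum(xs) / len(xs)})
data = DataModule()
for sample in [10.0, 20.0, 30.0]:
    data.collect("ai_ecn", sample)
compute = ComputeModule(models, data)
print(compute.infer("ai_ecn", "mean"))  # 0.5
```

Keeping the three modules separate means several AI functions can share one model store, one data pipeline, and one inference engine instead of each function carrying its own copy.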

Implementation mechanism of the EAI system

The preceding figure shows the implementation mechanism of the EAI system.

- The data module of the EAI system collects massive amounts of data related to each AI function on the device, preprocesses the data, and uses the preprocessed data as input to the computing power module.

- Users can load or delete different model files in the EAI system. These model files contain the trained models that are applicable to each AI function.

- The AI functions on the device subscribe to EAI services. No user configuration is required: an AI function subscribes automatically once it is enabled. After the subscription, the EAI system protects the subscribed model in the model file so that the latest version of the model cannot be deleted and is used as the input to the computing power module.

- The computing power module performs inference based on the algorithms from the model module and the data from the data module, and sends the inference result to the enabled AI function.

- The AI function delivers specific configurations based on the inference result of the EAI system.
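The mechanism described above (automatic subscription when an AI function is enabled, protection of the latest version of a subscribed model, and pushing inference results back to the AI function) can be sketched as follows. The EAISystem class, its methods, and the (version, model) layout of a model file are hypothetical illustrations, not a device API; the AI function is reduced to a callback that records the results it would turn into configurations.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical: a model maps a feature vector to an inference result.
Model = Callable[[List[float]], float]


class EAISystem:
    """Hypothetical sketch of the EAI subscription flow; not a real device API."""

    def __init__(self) -> None:
        # filename -> {model name -> (version, model)}
        self.model_files: Dict[str, Dict[str, Tuple[int, Model]]] = {}
        self.subscriptions: Dict[str, str] = {}  # AI function -> model name
        self.callbacks: Dict[str, Callable[[float], None]] = {}

    def load_model_file(self, filename: str, models: Dict[str, Tuple[int, Model]]) -> None:
        self.model_files[filename] = models

    def _latest(self, model_name: str) -> Tuple[str, int, Model]:
        """Return (filename, version, model) for the newest copy of a model."""
        candidates = [
            (version, filename, model)
            for filename, models in self.model_files.items()
            for name, (version, model) in models.items()
            if name == model_name
        ]
        if not candidates:
            raise KeyError(model_name)
        version, filename, model = max(candidates, key=lambda c: c[0])
        return filename, version, model

    def delete_model_file(self, filename: str) -> bool:
        """Delete a file unless it holds the latest version of a subscribed model."""
        for model_name in set(self.subscriptions.values()):
            in_file = model_name in self.model_files.get(filename, {})
            if in_file and self._latest(model_name)[0] == filename:
                return False  # protected: latest subscribed version
        self.model_files.pop(filename, None)
        return True

    def subscribe(self, function: str, model_name: str, on_result: Callable[[float], None]) -> None:
        """Invoked automatically when an AI function is enabled; no user configuration."""
        self.subscriptions[function] = model_name
        self.callbacks[function] = on_result

    def push(self, function: str, features: List[float]) -> None:
        """Infer with the latest model version and send the result to the AI function."""
        _, _, model = self._latest(self.subscriptions[function])
        self.callbacks[function](model(features))


# Usage with two versions of one hypothetical model.
delivered: List[float] = []
eai = EAISystem()
eai.load_model_file("v1.model", {"ecn": (1, lambda xs: max(xs))})
eai.load_model_file("v2.model", {"ecn": (2, lambda xs: sum(xs) / len(xs))})
eai.subscribe("ai_ecn", "ecn", delivered.append)
print(eai.delete_model_file("v2.model"))  # False: latest version is protected
print(eai.delete_model_file("v1.model"))  # True: an older version can be removed
eai.push("ai_ecn", [2.0, 4.0])
print(delivered)  # [3.0]
```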

Application of EAI

The Artificial Intelligence Explicit Congestion Notification (AI ECN) function intelligently adjusts the ECN thresholds of lossless queues based on the traffic model on the live network. This function ensures low latency and high throughput with zero packet loss, achieving optimal performance for lossless services.

The traditional static ECN function requires manual configuration of parameters such as the ECN threshold and ECN marking probability. For lossless services that require zero packet loss, a static ECN threshold cannot adapt to the ever-changing buffer usage of the queue. The AI ECN function subscribes to EAI services, performs AI training based on the traffic model on the live network, predicts network traffic changes, and infers the optimal ECN threshold in a timely manner. The ECN threshold can then be adjusted in real time as live-network traffic changes, allowing accurate management and control of the lossless queue buffer and ensuring optimal performance across the entire network.
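To show what "static" means here, the sketch below computes a marking probability from fixed, hand-configured parameters. It assumes a common RED-style scheme (no marking below a low threshold, marking every packet above a high threshold, and a probability rising linearly up to a configured maximum in between); the article does not specify the device's exact marking curve, and the threshold values are hypothetical.

```python
def ecn_mark_probability(queue_kb: float, k_min_kb: float, k_max_kb: float, p_max: float) -> float:
    """RED-style ECN marking probability for one queue (an assumed, typical scheme).

    Below k_min nothing is marked, above k_max every packet is marked, and in
    between the probability rises linearly from 0 to p_max.
    """
    if queue_kb <= k_min_kb:
        return 0.0
    if queue_kb >= k_max_kb:
        return 1.0
    return p_max * (queue_kb - k_min_kb) / (k_max_kb - k_min_kb)


# Static ECN: these values are configured by hand and never change at run time
# (hypothetical numbers, chosen only for illustration).
K_MIN_KB, K_MAX_KB, P_MAX = 100.0, 400.0, 0.2

for queue_kb in (50.0, 250.0, 500.0):
    p = ecn_mark_probability(queue_kb, K_MIN_KB, K_MAX_KB, P_MAX)
    print(f"queue={queue_kb:.0f} KB -> mark probability {p:.2f}")
```

Because K_MIN_KB, K_MAX_KB, and P_MAX are constants, the same queue depth always produces the same marking decision, whatever the traffic pattern; AI ECN replaces these constants with values inferred from live traffic.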

After the AI ECN function is enabled on a device, the AI ECN component automatically subscribes to EAI services. After receiving pushed traffic status information, the AI ECN component determines the current traffic model based on the inference result of the EAI system. If the traffic model has been trained in the EAI system, the AI ECN component calculates the ECN threshold that matches the current network status based on the optimal inference result of the EAI system, delivers this threshold to the device, and adjusts the ECN threshold of lossless queues accordingly. These operations are repeated for each newly obtained traffic status to ensure low latency and high throughput of lossless queues, so optimal lossless service performance can be attained in different traffic scenarios.
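The control loop just described (receive traffic status, infer the traffic model, apply trained thresholds if that model is known, repeat) can be sketched as below. Everything in it is a hypothetical stand-in: the TrafficStatus fields, the rule-based infer_traffic_model function standing in for EAI inference, and the threshold table with its values. It illustrates the loop's structure only, not Huawei's model or thresholds.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Thresholds = Tuple[int, int]  # (low, high) ECN thresholds in KB


@dataclass
class TrafficStatus:
    """Hypothetical traffic status information pushed to the AI ECN component."""
    throughput_gbps: float
    incast_ratio: float  # assumed feature: share of many-to-one burst traffic


def infer_traffic_model(status: TrafficStatus) -> str:
    """Stand-in for EAI inference: classify the current traffic model."""
    if status.incast_ratio > 0.5:
        return "incast"
    if status.throughput_gbps > 50.0:
        return "heavy"
    return "light"


# Hypothetical thresholds for the traffic models the EAI system has been trained on.
TRAINED_THRESHOLDS: Dict[str, Thresholds] = {
    "incast": (50, 200),
    "heavy": (200, 800),
}


def ai_ecn_step(status: TrafficStatus, current: Thresholds) -> Thresholds:
    """One iteration: infer the traffic model, then pick thresholds to deliver."""
    traffic_model = infer_traffic_model(status)
    # Untrained traffic model: keep the thresholds currently applied.
    return TRAINED_THRESHOLDS.get(traffic_model, current)


# Repeat the step for every newly pushed traffic status.
thresholds: Thresholds = (100, 400)  # initial, manually configured values
applied: List[Thresholds] = []
for status in [TrafficStatus(80.0, 0.1), TrafficStatus(20.0, 0.7), TrafficStatus(10.0, 0.1)]:
    thresholds = ai_ecn_step(status, thresholds)
    applied.append(thresholds)  # stands in for delivering the thresholds to the device
print(applied)  # [(200, 800), (50, 200), (50, 200)]
```

The third status maps to a traffic model with no trained entry, so the sketch keeps the thresholds already in place rather than guessing new ones.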

- Author: Feng Yuanyuan

- Updated on: 2024-02-27