What Is QoS?

Quality of Service (QoS) improves network resource utilization by allowing different types of traffic to compete for network resources based on their priorities, so that voice, video, and important data applications are processed preferentially on network devices.

Importance of QoS

Services on an IP network can be classified into real-time and non-real-time services. Real-time services, such as voice, occupy fixed bandwidth and are sensitive to changes in network quality, so they require a stable network. The bandwidth occupied by non-real-time services is unpredictable, and traffic bursts occur frequently. Burst traffic degrades network quality: it causes congestion, increases forwarding delay, and can even cause packet loss. As a result, service quality deteriorates, and services may even become unavailable.

Increasing network bandwidth is the most effective solution, but it is costly compared with deploying a service quality guarantee policy that manages traffic congestion.

QoS is applicable to scenarios where traffic bursts occur and the quality of important services needs to be guaranteed. If service quality requirements remain unmet for a long time (for example, when service traffic exceeds the bandwidth limit for a long period), expand the network capacity or use dedicated devices to control services based on upper-layer applications.

In recent years, video application traffic has grown explosively. In enterprises, applications such as HD video conferencing and HD video surveillance also generate large volumes of video traffic. Video traffic occupies more bandwidth than voice traffic, and interactive video applications in particular have strict real-time requirements. In addition, with the development of wireless networks, more and more users and enterprises use wireless terminals, and the mobility of these terminals makes network traffic even less predictable. All of this makes QoS solution design more challenging.

QoS Counters

Network quality is affected by the bandwidth of the transmission link, the delay and jitter of packet transmission, and the packet loss rate. These are known as the key QoS counters.

Bandwidth

Bandwidth, also called throughput, refers to the maximum number of data bits transmitted between two ends within a specified period (1 second) or the average rate at which specific data flows are transmitted between two network nodes. Bandwidth is expressed in bit/s. There are two common concepts related to bandwidth: uplink rate and downlink rate. The uplink rate refers to the rate at which users send information to a network, and the downlink rate refers to the rate at which a network sends information to users. For example, the rate at which users upload files to a network is determined by the uplink rate, and the rate at which users download files is determined by the downlink rate.
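To make the uplink/downlink distinction concrete, the following Python sketch computes ideal transfer times on a hypothetical asymmetric link (20 Mbit/s up, 100 Mbit/s down). The rates, file size, and the `transfer_time_seconds` helper are assumptions for illustration, and protocol overhead is ignored, so real transfers take longer.

```python
def transfer_time_seconds(size_bytes: int, rate_bps: float) -> float:
    """Ideal time to move size_bytes at a constant rate_bps (no protocol overhead)."""
    if rate_bps <= 0:
        raise ValueError("rate_bps must be positive")
    return size_bytes * 8 / rate_bps  # bytes -> bits, then divide by bit/s


# Hypothetical asymmetric access link and file size.
UPLINK_BPS = 20_000_000      # 20 Mbit/s from the user to the network
DOWNLINK_BPS = 100_000_000   # 100 Mbit/s from the network to the user
FILE_BYTES = 50_000_000      # 50 MB

upload_s = transfer_time_seconds(FILE_BYTES, UPLINK_BPS)
download_s = transfer_time_seconds(FILE_BYTES, DOWNLINK_BPS)
print(f"upload: {upload_s:.1f} s, download: {download_s:.1f} s")
# prints "upload: 20.0 s, download: 4.0 s"
```

The same file takes five times longer to upload than to download here simply because the uplink rate is one fifth of the downlink rate.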

Delay

Delay refers to the time required to transmit a packet or a group of packets from the transmit end to the receive end. It consists mainly of transmission delay and processing delay. Take voice transmission as an example: delay is the period from when words are spoken to when they are heard. Generally, people do not notice a delay of less than 100 ms. If the delay is in the range of 100 ms to 300 ms, both parties of the call can sense slight pauses in the peer's replies, which may seem annoying. If the delay exceeds 300 ms, both the speaker and the responder clearly sense the delay and have to wait for responses. If the speaker cannot wait and repeats what has been said, the voices overlap and the quality of the conversation deteriorates severely.
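The voice delay thresholds above can be encoded in a small helper, sketched here in Python. The function name and band labels are illustrative paraphrases, and treating exactly 300 ms as "slight pauses" is an assumption, since the text gives only ranges.

```python
def voice_delay_perception(delay_ms: float) -> str:
    """Classify a mouth-to-ear voice delay using the 100 ms / 300 ms bands above."""
    if delay_ms < 0:
        raise ValueError("delay cannot be negative")
    if delay_ms < 100:
        return "not noticeable"
    if delay_ms <= 300:
        return "slight pauses"
    return "obvious delay"


for d in (60, 150, 300, 420):
    print(d, "ms ->", voice_delay_perception(d))
```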

Jitter

If network congestion occurs, packets over the same connection experience different delays. Jitter describes the degree of delay variation, that is, the difference between the maximum delay and the minimum delay. Jitter is an important parameter for real-time transmission: real-time services such as voice and video are intolerant of jitter because it causes voice or video interruptions. Jitter also affects protocol packet transmission. Some protocols send interactive packets at a fixed interval, and excessive jitter can cause protocol flapping. Jitter is prevalent on networks but generally does not affect service quality as long as it stays within a certain tolerance. A buffer can absorb excessive jitter, but at the cost of increased delay.
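The following Python sketch computes jitter exactly as defined above (maximum delay minus minimum delay) and shows the buffer trade-off with a fixed playout delay: each packet is played a fixed time after it was sent, so a larger buffer lets more packets arrive in time but delays all of them. The delay samples and helper names are hypothetical.

```python
from collections.abc import Sequence


def jitter_ms(delays_ms: Sequence[float]) -> float:
    """Jitter as defined above: maximum delay minus minimum delay."""
    if not delays_ms:
        raise ValueError("need at least one delay sample")
    return max(delays_ms) - min(delays_ms)


def late_packets(delays_ms: Sequence[float], playout_delay_ms: float) -> int:
    """Count packets that miss a fixed playout deadline.

    Each packet is played out playout_delay_ms after it was sent, so it
    arrives too late exactly when its network delay exceeds that value.
    """
    return sum(1 for d in delays_ms if d > playout_delay_ms)


# Hypothetical one-way delays of six voice packets on a congested link.
samples = [40.0, 42.5, 55.0, 41.0, 70.0, 43.0]
print(jitter_ms(samples))          # 30.0
print(late_packets(samples, 50))   # 2: small buffer, two packets arrive too late
print(late_packets(samples, 70))   # 0: larger buffer absorbs jitter but adds delay
```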

Packet Loss Rate

The packet loss rate is the number of packets lost during data transmission as a percentage of the total number of packets sent. Slight packet loss does not affect services. For example, users do not notice the loss of a bit or a packet in voice transmission. The loss of a bit or a packet in video transmission may garble the image on the screen momentarily, but the image recovers quickly. Transmitting data over TCP can also handle slight packet loss, because TCP retransmits lost packets. Severe packet loss, however, reduces transmission efficiency. QoS therefore pays close attention to the packet loss rate, which must be kept within a certain range during transmission.
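As a worked example of the definition, the Python helper below computes the loss rate from sent and received counters. The counter values are hypothetical, and the sketch assumes no duplicated packets, so `received` never exceeds `sent`.

```python
def packet_loss_rate(sent: int, received: int) -> float:
    """Lost packets as a percentage of packets sent."""
    if sent <= 0:
        raise ValueError("sent must be positive")
    if not 0 <= received <= sent:
        raise ValueError("received must be between 0 and sent")
    return 100 * (sent - received) / sent


# Hypothetical counters from a probe that sent 10,000 packets.
print(packet_loss_rate(10_000, 9_950))  # 0.5
```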

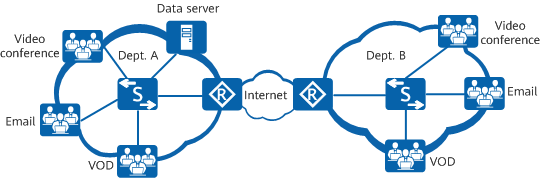

Application Scenarios of QoS

Take an enterprise office network as an example. In addition to basic web browsing and email services, services such as Telnet-based device login, remote video conferences, real-time voice calls, FTP file upload and download, and video playback must also have their network quality guaranteed during busy hours. Because these services have different network quality requirements, you can configure the corresponding QoS functions, or enable QoS only for some of them, to meet those requirements.

- Network protocols and management protocols: such as OSPF and Telnet

These services require low delay and a low packet loss rate, but not high bandwidth. To meet their requirements, configure priority mapping to map the priority of these service packets to a higher CoS value so that network devices forward them preferentially.

- Real-time services: such as video conferencing and VoIP

Video conferences require high bandwidth, low delay, and low jitter. To meet these requirements, configure traffic policing to provide sufficient bandwidth for video packets, and configure priority mapping to increase the priority of video packets.

VoIP refers to real-time voice calls over an IP network. It requires low packet loss, low delay, and low jitter; otherwise, both parties of a call suffer from poor call quality. To resolve this problem, configure priority mapping so that voice packets take precedence over video packets, and configure traffic policing to provide maximum bandwidth for voice packets. This ensures that voice packets are forwarded preferentially during network congestion.

- Heavy-traffic services: such as FTP, database backup, and file dump

Heavy-traffic services are network services that transmit large amounts of data over a long period. They require a low packet loss rate. To meet this requirement, configure traffic shaping so that service packets sent from an interface are buffered instead of dropped. This reduces packet loss when burst traffic causes congestion.

- Streaming media: such as online audio playback and video on demand (VOD)

Because users can buffer audio and video programs before playing them, these services have relatively low requirements on network delay, packet loss, and jitter. To reduce the packet loss rate and delay of these services, configure priority mapping to increase the priority of their packets.

- Common services: such as HTML web page browsing and email

Common services have no special requirements on the network. You do not need to deploy QoS for them.

Service Models

How can QoS indicators be kept within proper ranges to improve network service quality? The answer lies in the QoS model. QoS is an overall solution rather than a single function. When two hosts on a network communicate, traffic between them may traverse a large number of devices, so QoS can guarantee end-to-end (E2E) service quality only when all devices on the path use a unified QoS service model.

The following describes the three mainstream QoS models. Huawei switches, routers, firewalls, and WLAN devices support QoS based on Differentiated Services (DiffServ), the most commonly used model.

Best-Effort

Best-Effort is the default service model on the Internet and applies to various network applications, such as FTP and email. It is the simplest service model: an application can send any number of packets at any time without notifying the network. The network makes its best effort to transmit the packets but guarantees nothing about delay or reliability. The Best-Effort model suits services with low requirements for delay and packet loss rate.
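To illustrate what "no guarantee" means in practice, the Python sketch below models Best-Effort forwarding as a single FIFO queue with tail drop, which is a common default queuing behavior but is an assumption here. Because packets are not classified, a voice packet that arrives when the queue is full is dropped just like any other packet. The class name and packet labels are hypothetical.

```python
from collections import deque


class BestEffortQueue:
    """Best-Effort sketch: one FIFO queue, no classification, tail drop when full."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.queue = deque()
        self.dropped = []

    def enqueue(self, packet: str) -> bool:
        if len(self.queue) >= self.capacity:
            self.dropped.append(packet)  # no priority: the arriving packet is discarded
            return False
        self.queue.append(packet)
        return True

    def dequeue(self):
        """Return the oldest packet, or None if the queue is empty."""
        return self.queue.popleft() if self.queue else None


q = BestEffortQueue(capacity=3)
for pkt in ["ftp-1", "voice-1", "email-1", "voice-2"]:
    q.enqueue(pkt)
print(q.dropped)                        # ['voice-2']
print([q.dequeue() for _ in range(3)])  # ['ftp-1', 'voice-1', 'email-1']
```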

Integrated Service (IntServ)

In the IntServ model, an application uses a signaling protocol to notify the network of its traffic parameters and apply for a specific level of QoS before sending packets. The network reserves resources for the application based on the traffic parameters. After the application receives an acknowledgement message and confirms that sufficient resources have been reserved, it starts to send packets within the range specified by the traffic parameters. The network maintains a state for each packet flow and performs QoS behaviors based on this state to guarantee application performance.

The IntServ model uses Resource Reservation Protocol (RSVP) for signaling. Resources such as bandwidth and priority are reserved on a known path, and each network element along the path must reserve required resources for data flows requiring QoS guarantee. Each network element checks whether sufficient resources can be reserved based on these RSVP messages. The path is available only when all involved network elements can provide sufficient resources.
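The rule that a path is available only when every element can reserve the required resources amounts to all-or-nothing admission control. The Python sketch below models only that decision for bandwidth; RSVP's actual Path/Resv message exchange and soft-state refresh are deliberately omitted, and the element names and capacities are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class NetworkElement:
    name: str
    available_kbps: int


def reserve_path(path: list[NetworkElement], request_kbps: int) -> bool:
    """Admit a flow only if every element on the path can reserve request_kbps.

    Models only the all-or-nothing admission decision; RSVP's Path/Resv
    message exchange and soft-state refresh are deliberately omitted.
    """
    if any(hop.available_kbps < request_kbps for hop in path):
        return False  # one element lacks resources, so the path is unavailable
    for hop in path:
        hop.available_kbps -= request_kbps  # reserve on every hop
    return True


# Hypothetical three-hop path with per-hop spare bandwidth.
path = [NetworkElement("R1", 1000), NetworkElement("R2", 300), NetworkElement("R3", 800)]
print(reserve_path(path, 256))               # True
print(reserve_path(path, 256))               # False: R2 has only 44 kbit/s left
print([hop.available_kbps for hop in path])  # [744, 44, 544]
```

Because nothing is reserved unless every hop can accept the flow, a rejected request leaves the other hops' resources untouched. This per-flow bookkeeping on every element is also why IntServ scales poorly compared with DiffServ.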

DiffServ

DiffServ classifies packets on a network into multiple classes and processes each class differently, so that classes with a higher priority are given preference when congestion occurs. Packets of the same class are aggregated and handled as a whole, so they receive the same treatment in terms of delay, jitter, and packet loss rate.

In the DiffServ model, traffic classification and aggregation are completed on border nodes. A border node flexibly classifies packets based on a combination of different fields (such as the source and destination addresses, priority in the ToS field, and protocol type), and marks different classes of packets with appropriate priority values. Other nodes only need to identify these markings to allocate resources and control traffic.

Unlike the IntServ model, the DiffServ model does not require a signaling protocol. In this model, an application does not need to apply for network resources before sending packets. Instead, the application sets QoS parameters in the packets, through which the network can learn the QoS requirements of the application. The network provides differentiated services based on the QoS parameters of each data flow and does not need to maintain a state for each data flow. DiffServ takes full advantage of IP networks' flexibility and extensibility and transforms information in packets into per-hop behaviors (PHBs), greatly reducing signaling operations.
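The division of labor between border nodes and other nodes can be sketched in Python as follows: the border node classifies each packet once on several fields and writes a DSCP value, and interior nodes choose a per-hop behavior from the DSCP alone, without per-flow state. DSCP 46 (EF) and 34 (AF41) are standard code points, but the subnets, rules, and function names here are hypothetical.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass
class Packet:
    src: str
    protocol: str
    dscp: int = 0


# Hypothetical classification rules on the border node.
# DSCP 46 (EF) and 34 (AF41) are standard code points; which traffic gets
# which value here is an illustrative assumption.
BORDER_RULES = [
    (ip_network("10.1.1.0/24"), "udp", 46),  # voice terminals -> EF
    (ip_network("10.1.2.0/24"), "udp", 34),  # video terminals -> AF41
]

# Interior nodes keep no per-flow state: they only map the DSCP to a PHB.
PHB_BY_DSCP = {46: "EF", 34: "AF41", 0: "BE"}


def mark_at_border(pkt: Packet) -> Packet:
    """Classify on multiple fields once, at the edge, and write the DSCP."""
    for subnet, protocol, dscp in BORDER_RULES:
        if ip_address(pkt.src) in subnet and pkt.protocol == protocol:
            pkt.dscp = dscp
            return pkt
    pkt.dscp = 0  # unmatched traffic falls back to best effort
    return pkt


def interior_phb(pkt: Packet) -> str:
    """Per-hop behavior chosen from the DSCP alone."""
    return PHB_BY_DSCP.get(pkt.dscp, "BE")


voice = mark_at_border(Packet("10.1.1.7", "udp"))
backup = mark_at_border(Packet("10.1.1.7", "tcp"))
print(voice.dscp, interior_phb(voice))    # 46 EF
print(backup.dscp, interior_phb(backup))  # 0 BE
```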

Mechanisms in the DiffServ Model

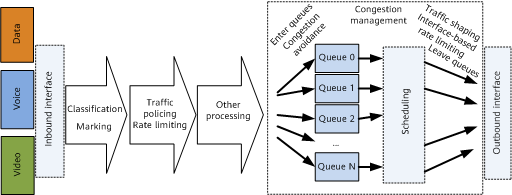

The DiffServ model involves the following QoS mechanisms:

Traffic classification and marking

Traffic classification and marking are prerequisites for implementing differentiated services. Traffic classification divides packets into different classes and can be implemented using traffic classifiers configured through the Modular QoS Command-Line Interface (MQC). Traffic marking sets different priorities for packets and can be implemented through priority mapping and re-marking. Packets carry different precedence fields depending on the network type: for example, the 802.1p field on a VLAN network, the EXP field on an MPLS network, and the DSCP field on an IP network.
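Priority mapping between these fields has to bridge different field widths: DSCP is 6 bits (0 to 63), while 802.1p and EXP are 3 bits (0 to 7). The Python sketch below assumes the common convention of keeping the three high-order DSCP bits, so DSCP 0 to 7 map to 0, 8 to 15 map to 1, and so on. Actual device mapping tables may differ and are usually configurable, and the function names are hypothetical.

```python
def dscp_to_3bit_priority(dscp: int) -> int:
    """Map a 6-bit DSCP to a 3-bit 802.1p or EXP value (high-order bits)."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP is a 6-bit field (0-63)")
    return dscp >> 3


def priority_3bit_to_dscp(priority: int) -> int:
    """Map a 3-bit 802.1p or EXP value back to the class-selector DSCP."""
    if not 0 <= priority <= 7:
        raise ValueError("802.1p and EXP are 3-bit fields (0-7)")
    return priority << 3


for dscp in (0, 10, 34, 46, 48):
    print(f"DSCP {dscp:2d} -> 802.1p/EXP {dscp_to_3bit_priority(dscp)}")
```

The mapping is lossy: DSCP 40 and 46 both map to 5, so mapping back from 802.1p or EXP can only recover the class-selector value (40), not the original DSCP.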

Traffic policing, traffic shaping, and interface-based rate limiting

Traffic policing and traffic shaping control the traffic rate within a bandwidth limit. Traffic policing drops excess traffic when the traffic rate exceeds the limit, whereas traffic shaping buffers excess traffic. Traffic policing and traffic shaping can be performed on an interface to implement interface-based rate limiting.
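The difference between dropping and buffering excess traffic can be shown with a single-rate token bucket. In the Python sketch below, both functions use the same bucket, but `police` discards packets that find too few tokens, while `shape` delays them until enough tokens accumulate. Devices commonly implement richer variants (for example, single-rate and two-rate three-color markers), so this is a simplified illustration; the rates, bucket depth, packet times, and the assumption of an unbounded shaping buffer are all hypothetical.

```python
class TokenBucket:
    """Single-rate token bucket: refills at rate_bps / 8 bytes/s, capped at burst_bytes."""

    def __init__(self, rate_bps: float, burst_bytes: float):
        self.rate = rate_bps / 8       # token fill rate in bytes per second
        self.burst = burst_bytes
        self.tokens = burst_bytes      # the bucket starts full
        self.last = 0.0

    def refill(self, now: float) -> None:
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now


def police(packets, rate_bps, burst_bytes):
    """Policing: forward conforming packets, drop the excess immediately."""
    tb = TokenBucket(rate_bps, burst_bytes)
    forwarded, dropped = [], []
    for arrival, size in packets:
        tb.refill(arrival)
        if tb.tokens >= size:
            tb.tokens -= size
            forwarded.append((arrival, size))
        else:
            dropped.append((arrival, size))
    return forwarded, dropped


def shape(packets, rate_bps, burst_bytes):
    """Shaping: buffer excess packets (FIFO, assumed unbounded) until tokens allow them out."""
    tb = TokenBucket(rate_bps, burst_bytes)
    departures = []
    prev_departure = 0.0
    for arrival, size in packets:
        if size > burst_bytes:
            raise ValueError("packet larger than bucket depth can never conform")
        send_at = max(arrival, prev_departure)  # FIFO: never overtake earlier packets
        tb.refill(send_at)
        if tb.tokens < size:
            send_at += (size - tb.tokens) / tb.rate  # wait for the missing tokens
            tb.tokens, tb.last = size, send_at
        tb.tokens -= size
        departures.append((send_at, size))
        prev_departure = send_at
    return departures


# Hypothetical burst: four 500-byte packets 0.1 s apart,
# limit 8 kbit/s (1000 bytes/s) with a 1500-byte bucket.
burst = [(0.0, 500), (0.1, 500), (0.2, 500), (0.3, 500)]
forwarded, dropped = police(burst, 8000, 1500)
print(len(forwarded), len(dropped))                        # 3 1
print([round(t, 3) for t, _ in shape(burst, 8000, 1500)])  # [0.0, 0.1, 0.2, 0.5]
```

With the same limit, policing loses the fourth packet, while shaping delivers it 0.2 s late. This is the trade-off between packet loss and added delay described above.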

Congestion management and congestion avoidance

Congestion management buffers packets in queues upon network congestion and determines the forwarding order using a specific scheduling algorithm. Congestion avoidance monitors network resource usage and drops packets to mitigate network overloading when congestion worsens.
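The following Python sketch pairs one congestion management scheduler with one congestion avoidance profile. Strict priority queuing is only one scheduling algorithm (devices also offer weighted schedulers, and strict priority can starve low-priority queues). The RED-style drop profile, the basis of WRED, drops nothing below a minimum threshold, drops with linearly rising probability between the thresholds, and drops every new arrival above the maximum threshold. The queue numbering (higher index means higher priority) and all threshold values are assumptions.

```python
import random
from collections import deque


class StrictPriorityScheduler:
    """Congestion management sketch: the highest non-empty queue is always served first."""

    def __init__(self, num_queues: int = 8):
        self.queues = [deque() for _ in range(num_queues)]

    def enqueue(self, packet, queue_index: int) -> None:
        self.queues[queue_index].append(packet)

    def dequeue(self):
        for q in reversed(self.queues):  # scan from highest priority down
            if q:
                return q.popleft()
        return None


def red_drop_probability(avg_queue_len, min_th, max_th, max_p):
    """Congestion avoidance sketch: RED-style drop profile (the basis of WRED)."""
    if avg_queue_len < min_th:
        return 0.0  # no congestion: never drop
    if avg_queue_len >= max_th:
        return 1.0  # severe congestion: drop every new arrival
    return max_p * (avg_queue_len - min_th) / (max_th - min_th)


def should_drop(avg_queue_len, min_th, max_th, max_p, rng=random.random):
    """Randomly decide whether to drop an arriving packet."""
    return rng() < red_drop_probability(avg_queue_len, min_th, max_th, max_p)


sched = StrictPriorityScheduler()
sched.enqueue("ftp", 0)
sched.enqueue("video", 4)
sched.enqueue("voice", 5)
print([sched.dequeue() for _ in range(3)])    # ['voice', 'video', 'ftp']
print(red_drop_probability(30, 20, 40, 0.1))  # 0.05
```

WRED applies separate thresholds per priority or color, so lower-priority packets start being dropped earlier than higher-priority ones.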

Traffic classification and marking are the basis of differentiated services. Traffic policing, traffic shaping, interface-based rate limiting, congestion management, and congestion avoidance control network traffic and resource allocation to implement differentiated services.

QoS service process

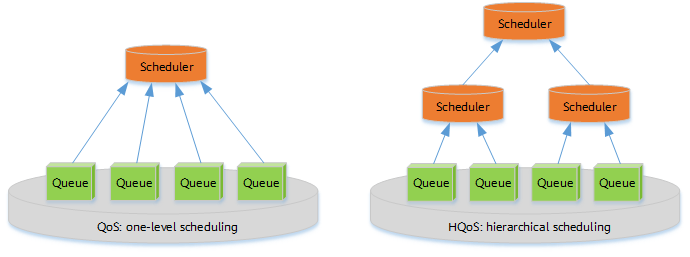

QoS vs. HQoS

- In traditional QoS, traffic is scheduled based on interface bandwidth. This differentiates traffic by service level, but makes it difficult to differentiate services by user. Therefore, traditional QoS is typically applied at the core layer rather than the access layer.

- Traditional QoS cannot manage or schedule traffic of multiple services for multiple users simultaneously.

Hierarchical Quality of Service (HQoS) addresses these issues by differentiating the traffic of different users and scheduling traffic based on service priorities. HQoS uses multiple levels of queues to further differentiate service traffic, and provides uniform management and hierarchical scheduling for transmission objects such as users and services. It enables network devices to control internal resources, guaranteeing QoS for VIP users while reducing network construction costs.
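A minimal two-level sketch in Python shows why hierarchical scheduling lets a device differentiate users as well as services. Level 1 rotates round-robin across users, and level 2 serves each user's service queues in strict priority. Real HQoS implementations use more levels, weights, and per-user shaping, so the round-robin and strict-priority choices, the class name, and the packet labels are all assumptions.

```python
from collections import deque


class TwoLevelHQoS:
    """Level 1: round-robin across users. Level 2: strict priority across each user's services."""

    def __init__(self, services):
        self.services = list(services)  # ordered from highest to lowest priority
        self.user_queues = {}           # user -> {service: deque of packets}
        self.rr = deque()               # round-robin order of users

    def enqueue(self, user, service, packet) -> None:
        if user not in self.user_queues:
            self.user_queues[user] = {s: deque() for s in self.services}
            self.rr.append(user)
        self.user_queues[user][service].append(packet)

    def dequeue(self):
        """Return (user, packet), or None when every queue is empty."""
        for _ in range(len(self.rr)):
            user = self.rr[0]
            self.rr.rotate(-1)  # the next call starts with the next user
            for service in self.services:
                q = self.user_queues[user][service]
                if q:
                    return user, q.popleft()
        return None


hqos = TwoLevelHQoS(["voice", "video", "data"])
for pkt in ("A-voice-1", "A-voice-2", "A-voice-3"):
    hqos.enqueue("A", "voice", pkt)
hqos.enqueue("B", "data", "B-data-1")

order = []
while (item := hqos.dequeue()) is not None:
    order.append(item)
print(order)
# [('A', 'A-voice-1'), ('B', 'B-data-1'), ('A', 'A-voice-2'), ('A', 'A-voice-3')]
```

A single flat strict-priority queue would send all three of user A's voice packets before user B's data. Here, user B gets a turn after A's first packet, while each user's own voice traffic still outranks that user's data.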

Scheduling models of QoS and HQoS

- Author: Zhu Yue

- Updated on: 2021-11-24
