What Is an Intelligent Lossless Network?

In scenarios such as distributed storage, high-performance computing (HPC), and AI, RDMA over Converged Ethernet version 2 (RoCEv2) is used to reduce CPU load and latency and to improve application performance. Distributed high-performance applications typically follow the N:1 incast traffic model, in which many senders transmit to a single receiver at the same time. On Ethernet switches, incast traffic can cause instantaneous burst congestion, or even packet loss, in the switch's internal queue buffer. As a result, application latency increases and throughput decreases, which lowers the performance of distributed applications.

An intelligent lossless network uses an AI-ready hardware architecture and the iLossless algorithm (an AI-powered intelligent lossless algorithm) to achieve maximum throughput and minimum latency without packet loss in AI, distributed storage, and HPC scenarios. This improves computing and storage efficiency and builds a converged network for future data centers (DCs).
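As a rough illustration of why incast bursts overflow a queue so quickly, the following sketch estimates how long an egress buffer lasts when several senders burst at line rate toward one port of the same speed. The function name and the example figures (8 senders, 25 Gbit/s links, a 2 MB buffer) are assumptions chosen for illustration, not values from any particular switch.

```python
def incast_overflow_time_us(senders, link_gbps, buffer_mb):
    """Estimate the time (in microseconds) until an egress buffer overflows.

    Assumes `senders` hosts all burst at line rate into one egress port
    that drains at the same line rate, with no flow or congestion control.
    Returns None if the queue never grows.
    """
    arrival_gbps = senders * link_gbps
    excess_gbps = arrival_gbps - link_gbps  # rate at which the queue grows
    if excess_gbps <= 0:
        return None
    buffer_bits = buffer_mb * 8 * 1_000_000
    bits_per_us = excess_gbps * 1_000       # 1 Gbit/s = 1000 bits per microsecond
    return buffer_bits / bits_per_us


if __name__ == "__main__":
    t = incast_overflow_time_us(senders=8, link_gbps=25, buffer_mb=2)
    print(f"Buffer overflows after about {t:.1f} microseconds")
```

Under these assumed numbers the buffer fills in well under a millisecond, which is why lossless operation depends on the flow control and congestion control mechanisms described later rather than on buffer size alone.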

What Are the Advantages of an Intelligent Lossless Network?

AlphaGo's victory in March 2016 was a major milestone in AI research, signaling the arrival of a Fourth Industrial Revolution characterized by AI. More and more enterprises are incorporating AI into their digital transformation strategies. Against this backdrop, the focus of enterprise DCs in the AI era is shifting from fast service provisioning to efficient data processing. Computing, storage, and network are the three key elements of a DC, and advances in one drive the others. With the explosive growth of AI application data, heterogeneous computing based on graphics processing units (GPUs) and AI chips is booming, and computing performance has improved by 600 times in the past five years. In the data storage field, the access performance of SSDs is 100 times higher than that of HDDs, and the performance of Non-Volatile Memory Express (NVMe) is 100 times higher than that of SSDs. The rapid development of computing and storage poses new requirements for data center networks: zero packet loss, high throughput, and low latency. Intelligent lossless networks have emerged in response to these requirements.

The intelligent lossless network solution has the following visions:

- Fully converged Ethernet network

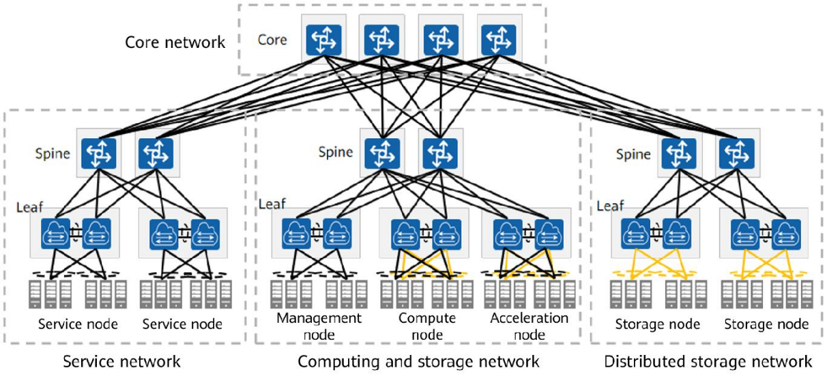

Leveraging RoCEv2, the intelligent lossless network aims to implement Ethernet-based unified management of the service network, computing network, and storage network. This solves the problem of multiple networks using multiple technologies in the traditional data center network in the IP and FC eras, and meets the low latency requirements of the data center storage network.

Fully converged Ethernet network

- Application acceleration

The intelligent lossless network provides intelligent lossless algorithms such as Convolutional Neural Network (CNN) and Deep Q-Network (DQN) to resolve network congestion and offload applications to the network, accelerating computing and storage applications.

- Autonomous driving network

The intelligent lossless network uses intelligent lossless algorithms such as CNN and DQN to automatically learn network parameters, and adjusts the network accordingly to achieve zero packet loss, maximum throughput, and minimum latency.

Key Technologies of the Intelligent Lossless Network Solution

Flow control

Flow control is a basic technology for ensuring zero packet loss on a network. It adjusts the data transmission rate of the traffic sender so that the traffic receiver can receive all packets, thereby preventing packet loss when the traffic receiving interface is congested.

Mainstream flow control technologies include:

- Priority-based Flow Control (PFC): PFC is the most widely used flow control technology. When a PFC-enabled queue on a device is congested, the upstream device stops sending traffic for that queue, achieving zero packet loss.

- PFC storm control: Also called PFC deadlock detection, this technology prevents network traffic interruptions caused by PFC storms.

- PFC deadlock free: This technology identifies cyclic buffer dependencies and eliminates the necessary conditions for generating them, resolving the PFC deadlock problem and enhancing network reliability.
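The pause-and-resume behavior of PFC described above can be sketched as a queue with two thresholds. This is a minimal model, not a device implementation: the class name and the `xoff`/`xon` threshold names are assumptions, and real PFC signals the upstream device with per-priority pause frames rather than return values.

```python
class PfcQueue:
    """Toy model of one PFC-enabled ingress queue (hypothetical names).

    When the queue depth reaches `xoff`, the upstream device is asked to
    pause; once the depth drains to `xon` or below, it is asked to resume.
    """

    def __init__(self, xoff, xon):
        if xon >= xoff:
            raise ValueError("xon must be lower than xoff")
        self.xoff = xoff
        self.xon = xon
        self.depth = 0
        self.paused = False  # True while the upstream device is paused

    def enqueue(self, cells):
        self.depth += cells
        return self._update()

    def dequeue(self, cells):
        self.depth = max(0, self.depth - cells)
        return self._update()

    def _update(self):
        # Return the signal to send upstream, or None if the state is unchanged.
        if not self.paused and self.depth >= self.xoff:
            self.paused = True
            return "PAUSE"
        if self.paused and self.depth <= self.xon:
            self.paused = False
            return "RESUME"
        return None


if __name__ == "__main__":
    q = PfcQueue(xoff=100, xon=40)
    for action, cells in [("enqueue", 60), ("enqueue", 50),
                          ("dequeue", 30), ("dequeue", 50)]:
        signal = getattr(q, action)(cells)
        print(action, cells, "depth:", q.depth, "signal:", signal)
```

The gap between the two thresholds keeps the queue from toggling between pause and resume on every packet.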

Congestion control

Congestion control limits the total amount of data entering a network to keep network traffic at an acceptable level. It differs from flow control in scope: flow control protects a traffic receiver, whereas congestion control protects the network as a whole. Congestion control requires collaboration among the forwarding device, traffic sender, and traffic receiver, and uses congestion feedback mechanisms to adjust traffic across the entire network to mitigate congestion.

Mainstream congestion control technologies include:

- Explicit Congestion Notification (ECN): When congestion occurs on a network, ECN enables the traffic receiver to detect congestion on the network and notify the traffic sender of the congestion. After receiving a notification, the traffic sender reduces the packet sending rate. This prevents packet loss due to congestion and maximizes the network performance.

- AI ECN: Using the Intelligent Lossless (iLossless) algorithm, AI ECN enables the device to perform AI training based on the traffic model of the live network, predict traffic changes and the optimal ECN thresholds, and adjust the ECN thresholds in real time as live-network traffic changes. In this way, the lossless queue buffer is accurately managed and controlled, ensuring optimal performance across the entire network. In addition, AI ECN can be combined with queue scheduling to implement hybrid scheduling of TCP and RoCEv2 traffic on the network. This ensures lossless transmission of RoCEv2 traffic with low latency and high throughput, giving lossless services optimal performance.

- Network-based Proactive Congestion Control (NPCC): NPCC is a proactive congestion control technology centered on network devices. It intelligently identifies the congestion status of ports on network devices, enables devices to proactively send Congestion Notification Packets (CNPs), and accurately controls the rate at which the server sends RoCEv2 packets. This keeps the transmission rate appropriate both when congestion occurs and when it is relieved, achieving low latency and high throughput for RoCEv2 services in long-distance scenarios such as Data Center Interconnect (DCI).

- Intelligent Quantized Congestion Notification (iQCN): iQCN enables the forwarding device to intelligently detect network congestion. The iQCN-enabled forwarding device proactively sends CNPs to the sender based on the interval at which the receiver sends CNPs and the interval between rate increase events on the sender's NIC. As a result, the sender receives CNPs in time and does not increase its sending rate, which prevents congestion from being exacerbated.
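The ECN thresholds mentioned above are commonly expressed as a low threshold, a high threshold, and a maximum marking probability for a queue. The sketch below shows that threshold-based marking rule with fixed values; the parameter names `k_min`, `k_max`, and `p_max` are illustrative assumptions, and it does not attempt to model how AI ECN, NPCC, or iQCN choose or react to these values.

```python
def ecn_mark_probability(queue_depth, k_min, k_max, p_max):
    """Probability of ECN-marking a packet for a given queue depth.

    Below k_min nothing is marked, at or above k_max everything is marked,
    and in between the probability rises linearly from 0 to p_max.
    All parameter names are assumptions for illustration.
    """
    if queue_depth <= k_min:
        return 0.0
    if queue_depth >= k_max:
        return 1.0
    return p_max * (queue_depth - k_min) / (k_max - k_min)


if __name__ == "__main__":
    for depth_kb in (50, 250, 400):
        p = ecn_mark_probability(depth_kb, k_min=100, k_max=400, p_max=0.2)
        print(f"queue depth {depth_kb} KB -> marking probability {p:.2f}")
```

With static thresholds, values set too low throttle senders unnecessarily and values set too high let the queue build up latency, which is the trade-off that dynamic threshold adjustment is meant to address.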

Traffic scheduling

Traffic scheduling maintains load balancing for service traffic and network links, ensuring the quality of different service traffic.

- Dynamic load balancing: When data packets are forwarded, the system dynamically selects a proper link based on the traffic bandwidth and the load of each member link to implement even traffic distribution. This prevents long delay or high packet loss due to heavy load on a link.

- Queue scheduling: Queue scheduling controls how traffic is sent from different queues, providing differentiated quality assurance for the traffic in each queue.
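To make the dynamic load balancing idea concrete, the following sketch places each new flow on the member link that would have the lowest utilization after accepting it. The function name, the list-based link representation, and the selection rule are assumptions for illustration; actual devices may use different granularity and metrics.

```python
def assign_flow(link_load_gbps, link_capacity_gbps, flow_gbps):
    """Place a flow on the member link with the lowest resulting utilization.

    `link_load_gbps` is updated in place; the chosen link index is returned.
    This selection rule is a simplified assumption, not a vendor algorithm.
    """
    def utilization_after(i):
        return (link_load_gbps[i] + flow_gbps) / link_capacity_gbps[i]

    best = min(range(len(link_load_gbps)), key=utilization_after)
    link_load_gbps[best] += flow_gbps
    return best


if __name__ == "__main__":
    loads = [30.0, 10.0, 20.0]        # current load per member link
    capacities = [40.0, 40.0, 40.0]   # capacity per member link
    for flow in (5.0, 8.0, 8.0):
        chosen = assign_flow(loads, capacities, flow)
        print(f"flow of {flow} Gbit/s -> link {chosen}, loads now {loads}")
```

Unlike static hashing, this kind of selection takes the current load into account, so a heavily loaded link is not chosen merely because a flow's header fields happen to hash to it.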

Integrated Network and Computing (INC)

In the traffic model of an HPC network, more than 80% of the traffic consists of packets whose payload is less than 16 bytes. This places demanding requirements on static latency. Typically, the static latency of an Ethernet chip is about 500 ns, and that of an InfiniBand (IB) chip is about 90 ns. Ethernet's static latency disadvantage can be reduced or even eliminated through network-computing collaboration.

INC aggregates Message Passing Interface (MPI) communication data on network devices to ensure low latency in HPC small-packet scenarios and to reduce communication waiting time, thereby improving computing efficiency.
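The aggregation step can be pictured as a sum reduction performed at the switch instead of on the hosts. The sketch below is a functional model only, with a hypothetical function name; it assumes every host contributes a vector of the same length and says nothing about how a real device implements or accelerates the operation.

```python
def switch_allreduce_sum(host_vectors):
    """Element-wise sum of the vectors contributed by each host.

    Models a switch that aggregates one small message per host and returns
    a single result to all of them, instead of the hosts exchanging
    partial results with each other. Assumes equal-length vectors.
    """
    if not host_vectors:
        return []
    length = len(host_vectors[0])
    if any(len(v) != length for v in host_vectors):
        raise ValueError("all hosts must contribute vectors of equal length")
    return [sum(column) for column in zip(*host_vectors)]


if __name__ == "__main__":
    contributions = [[1, 2], [3, 4], [5, 6]]  # one small vector per host
    print(switch_allreduce_sum(contributions))
```

In this model each host sends one message and receives one result, so the number of network traversals per collective operation does not grow with the number of hosts.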

Intelligent Lossless NVMe Over Fabrics (iNOF)

iNOF technology allows a device to quickly manage and control hosts, and applies intelligent lossless network technology to storage systems to implement the convergence of computing and storage networks.

Application Scenarios of Intelligent Lossless Networks

Distributed Storage

Traditional networks store data on centralized storage servers, which may become the bottleneck of system performance and a vulnerable point in terms of availability and security, failing to meet the requirements of large-scale storage applications.

A distributed storage system stores data on multiple independent devices and adopts a scalable system architecture. Multiple storage servers can share the storage load, and location servers can locate storage information. This improves the system scalability, reliability, availability, and access efficiency.

Centralized Storage

A centralized storage system is a single storage system made up of multiple devices. In terms of technical architecture, centralized storage can be classified into storage area network (SAN) and network-attached storage (NAS). RoCEv2-powered intelligent lossless networks are well suited to transmitting large data blocks between servers and storage devices. They are applicable to:

- Database applications that have demanding requirements for response time, availability, and scalability

- Centralized backup that requires high performance, data consistency, and reliability

- High availability and failover, reducing costs and maximizing application performance

- Scalable storage virtualization, separation of the storage from directly connected servers, and dynamic storage partitioning

- Improved disaster tolerance, with high performance and extended Fibre Channel distances between servers and connected devices

HPC

Computing power is aggregated to perform data-intensive computing tasks, such as emulation, modeling, and rendering, which are beyond the capacity of standard workstations. In traditional network-intensive HPC scenarios, computing tasks are completed on the server side, and the network side is only responsible for data forwarding. In scenarios where the intelligent lossless network and HPC are converged, dedicated or high-end switching devices are used to accelerate collective communication or integrate the computing capabilities of multiple units. This effectively improves the efficiency of collective communication and reduces the total job completion time.

Machine Learning

Machine learning is a way to implement AI. Unlike traditional software programs that are hard-coded to resolve specific tasks, machine learning extracts a large number of samples through multi-layer neural networks, parses data based on deep learning algorithms, and continuously learns and adjusts parameters. In this way, it makes predictions and decisions about events in the real world. To improve the computing capability of AI applications, distributed AI clusters are used for AI training.

With iLossless technologies, the intelligent lossless network solves the problem of packet loss caused by congestion on the traditional Ethernet in machine learning scenarios. In addition to zero packet loss, it achieves the maximum throughput and minimum latency, meeting the high performance requirements of machine learning applications.

- Author: Zhao Qingqing

- Updated on: 2021-09-30
