What Is a Hyper-Converged Data Center Network?

A data center network (DCN) connects general-purpose computing, storage, and high-performance computing (HPC) resources in a data center (DC). All data exchanged between servers is transmitted over the DCN. Today, IT architectures and computing and storage technologies are undergoing significant changes, driving the DCN to evolve from multiple independent networks into a single Ethernet network. However, traditional Ethernet networks cannot meet the requirements of storage and HPC services. A hyper-converged DCN is a new type of DCN built on a single lossless Ethernet network that carries general-purpose computing, storage, and HPC services. It also supports full-lifecycle automation and network-wide intelligent O&M.

- Why Hyper-Converged Data Center Network?

- What Are the Core Indicators of a Hyper-Converged Data Center Network?

- What Are the Differences Between a Hyper-Converged Data Center Network and the HCI?

- What Is Huawei's Hyper-Converged Data Center Network Solution?

- What Are the Benefits of Huawei's Hyper-Converged Data Center Network?

- How Does Huawei's Hyper-Converged Data Center Network Work?

Why Hyper-Converged Data Center Network?

As-Is: Three Independent Networks in a DC

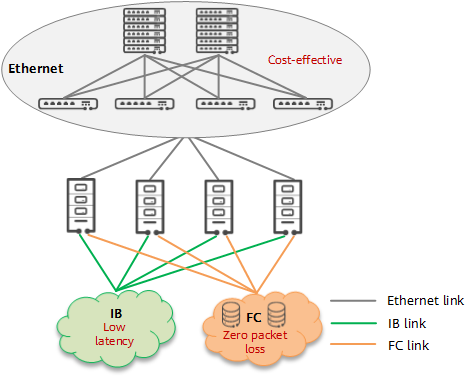

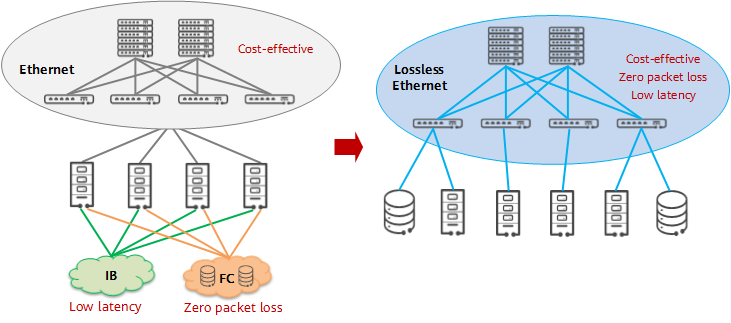

A DC typically carries three types of services: general-purpose computing, HPC, and storage. Each type has different network requirements. For example, HPC services require low-latency communication between nodes; storage services require high reliability and zero packet loss; and general-purpose computing services require a cost-effective, easily expandable network that supports large scale and high scalability.

To meet the preceding requirements, three independent networks are deployed in a DC:

- InfiniBand (IB) network: carries HPC services.

- Fibre Channel (FC) network: carries storage services.

- Ethernet network: carries general-purpose computing services.

Three independent networks in a DC

Challenge 1 in the AI Era: Network Becomes a Bottleneck Due to Significant Improvement in Storage and Computing Capabilities

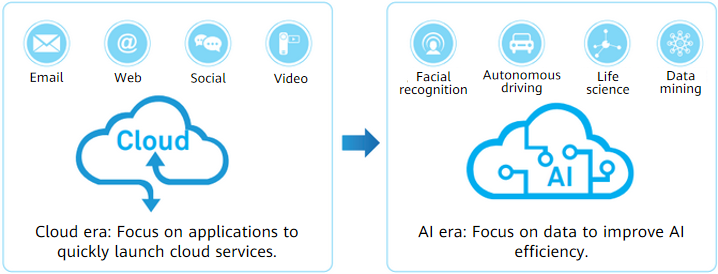

A large amount of data is generated during enterprise digitalization, and this data is becoming a core asset of enterprises. Mining value from massive data through artificial intelligence (AI) is crucial to enterprises in the AI era. AI-powered machine learning and real-time decision-making based on diverse data have become core tasks of enterprise operations. Compared with the cloud computing era, the focus of enterprise DCs in the AI era is shifting from fast service provisioning to efficient data processing.

DCs evolving towards the AI era

To improve the efficiency of processing massive data through AI, revolutionary changes are taking place in the storage and computing fields:

- Storage media are evolving from hard disk drives (HDDs) to solid-state drives (SSDs) to meet real-time data access requirements, reducing storage media latency by more than 99%.

- To meet the requirements for efficient data computing, the industry has adopted GPUs and even dedicated AI chips, improving data processing capability more than 100-fold.

As storage media and computing capabilities have improved significantly, network latency has become the bottleneck for overall application performance in high-performance DC clusters. The network's share of end-to-end latency has grown from about 10% to more than 60%; that is, more than half of the latency of accessing storage or computing resources is spent on network communication.

As storage media and processors evolve, low network efficiency holds back further gains in computing and storage performance. Overall application performance can improve only when communication latency is reduced to a level close to that of computing and storage.
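The growing network share is simple arithmetic. The sketch below uses a purely hypothetical latency budget (not measured figures from this article): the network keeps a fixed latency while faster media and processors shrink everything else, so the network's share of end-to-end latency rises.

```python
def network_share(network_us: float, other_us: float) -> float:
    """Fraction of end-to-end latency spent in the network."""
    return network_us / (network_us + other_us)


# Hypothetical budget: 10 us in the network, 90 us in storage media and
# computing, so the network accounts for 10% of end-to-end latency.
network_us = 10.0
other_us = 90.0
before = network_share(network_us, other_us)

# Hypothetical: faster media and processors cut the non-network part by 95%,
# while the network latency itself stays the same.
after = network_share(network_us, other_us * 0.05)

print(f"network share before: {before:.0%}")
print(f"network share after:  {after:.0%}")
```

Nothing about the network got worse in this example; its share grows only because the other components shrank, which is why the network must get faster for applications to benefit.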

Challenge 2 in the AI Era: RDMA Replacing TCP/IP Becomes an Inevitable Trend, but the RDMA Network Bearer Solution Has Demanding Requirements

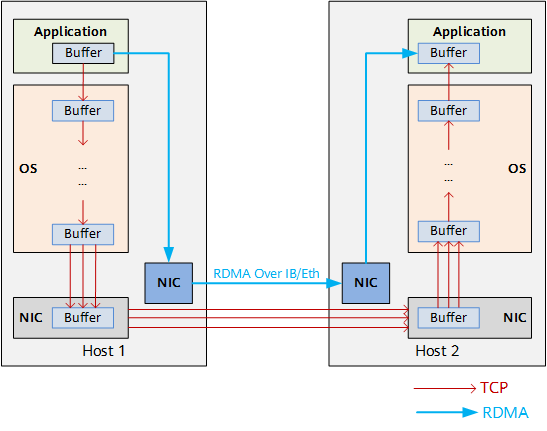

As shown in the figure, the TCP/IP protocol stack introduces tens of microseconds of latency inside a server when sending, receiving, and processing packets. In systems with microsecond-level latency, such as AI data computing and SSD-based distributed storage, the TCP protocol stack therefore becomes the most obvious latency bottleneck. In addition, as the network scale and bandwidth grow, more and more CPU resources are consumed by data transmission.

Remote Direct Memory Access (RDMA) allows network interface cards (NICs) to read and write application memory directly, bypassing the kernel protocol stack and reducing the data transmission latency within a server to about 1 microsecond. In addition, it allows a receiver to read data directly from the memory of the sender, which greatly reduces CPU load.

Comparison between RDMA and TCP

According to service test data, RDMA improves computing efficiency by 6 to 8 times. Because in-server transmission latency drops to about 1 microsecond, SSD-based distributed storage latency can be shortened from milliseconds to microseconds. In the latest Non-Volatile Memory Express (NVMe) interface protocols, RDMA has become a mainstream network communication protocol stack. Replacing TCP/IP with RDMA has therefore become an inevitable trend.

There are two ways to carry RDMA on the interconnection network between servers: a dedicated IB network or a traditional IP Ethernet network. However, both have disadvantages.

- IB network: It uses a closed architecture and proprietary protocols, which makes it difficult to interconnect with large-scale legacy IP networks. Complex O&M that requires dedicated personnel results in high operating expense (OPEX).

- Traditional IP Ethernet network: For RDMA, a packet loss rate greater than 10^-3 causes network throughput to drop sharply, and a packet loss rate of 2% reduces RDMA throughput to zero. To keep RDMA throughput unaffected, the packet loss rate must be below 1/100000 (10^-5), ideally zero. However, a traditional IP Ethernet network discards packets upon congestion. It relies on Priority-based Flow Control (PFC) and Explicit Congestion Notification (ECN) to avoid packet loss, but these mechanisms use backpressure signals that achieve zero packet loss by lowering the transmission rate, so they do not improve throughput.
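Why such small loss rates matter can be illustrated with the textbook efficiency model for go-back-N retransmission, the loss-recovery scheme many RoCE NIC implementations have traditionally used: every lost packet forces the sender to resend the whole window of packets in flight. The sketch below assumes independent losses and a hypothetical window of 1,000 packets; it is a simplified analytical model, not a reproduction of the measurements quoted above.

```python
def go_back_n_efficiency(loss_rate: float, window_pkts: int) -> float:
    """Fraction of link capacity carrying new data under go-back-N recovery.

    Textbook result U = (1 - p) / (1 + (N - 1) * p) for a window of N packets
    that fills the round trip, assuming independent losses with probability p.
    """
    p = loss_rate
    return (1 - p) / (1 + (window_pkts - 1) * p)


WINDOW_PKTS = 1000  # hypothetical: packets in flight on a high-bandwidth path
for p in (1e-5, 1e-4, 1e-3, 2e-2):
    print(f"loss rate {p:.0e}: efficiency {go_back_n_efficiency(p, WINDOW_PKTS):.1%}")
```

Under these assumptions, efficiency is about 99% at a loss rate of 10^-5, about 50% at 10^-3, and under 5% at 2%. The model ignores retransmission timeouts and other effects, so it shows the trend rather than the exact figures above.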

Therefore, efficient RDMA must be carried over an open Ethernet network with zero packet loss and high throughput.

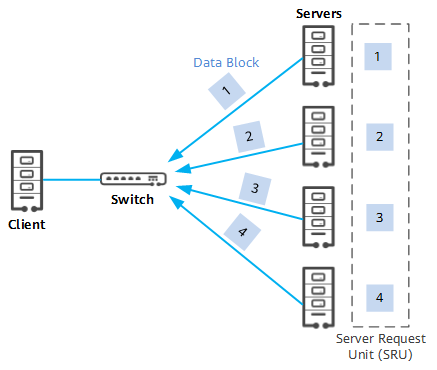

Challenge 3 in the AI Era: Distributed Architecture Becomes the Trend, Aggravating Network Congestion and Driving Network Transformation

In the digital transformation of enterprises such as financial institutions and Internet companies, many application systems are being migrated to distributed architectures. Against this backdrop, large numbers of PC servers are replacing midrange computers, bringing benefits such as low cost, high scalability, and high controllability. However, this also poses challenges to network interconnection:

- The distributed architecture requires a large amount of communication between servers.

- Incast traffic (many-to-one traffic) produces bursts at the receiver that momentarily exceed the capacity of the receiver's interface, causing congestion and, as a result, packet loss.

- With the increasing complexity of distributed system applications, the size of packets exchanged between servers keeps increasing. Such large packets further aggravate network congestion.

Traffic model of the distributed architecture
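The Incast effect can be reproduced with a toy model of a single switch egress port. The sketch below assumes that every sender bursts at the receiver's full line rate into one shared buffer that drains at that same rate; the sender count, burst size, and buffer size are hypothetical and measured in packets per time slot.

```python
def simulate_incast(senders: int, burst_pkts: int, buffer_pkts: int) -> tuple[int, int]:
    """Simulate a synchronized many-to-one burst into one egress port.

    In each time slot, every sender with data left injects one packet (line
    rate), and the egress port transmits one packet (the same line rate).
    Returns (delivered, dropped).
    """
    queue = delivered = dropped = 0
    remaining = [burst_pkts] * senders
    while any(remaining) or queue:
        # Arrivals: each active sender contributes one packet this slot.
        for i in range(senders):
            if remaining[i]:
                remaining[i] -= 1
                if queue < buffer_pkts:
                    queue += 1
                else:
                    dropped += 1  # tail drop: the shared buffer is full
        # Departure: the egress link transmits one packet per slot.
        if queue:
            queue -= 1
            delivered += 1
    return delivered, dropped


print(simulate_incast(senders=1, burst_pkts=100, buffer_pkts=200))
print(simulate_incast(senders=8, burst_pkts=100, buffer_pkts=200))
```

A single sender never fills the buffer and prints `(100, 0)`. Eight synchronized senders grow the queue by seven packets per slot, overflow after about 29 slots, and print `(299, 501)`. Larger exchanges only lengthen the drop phase.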

What Are the Core Indicators of a Hyper-Converged Data Center Network?

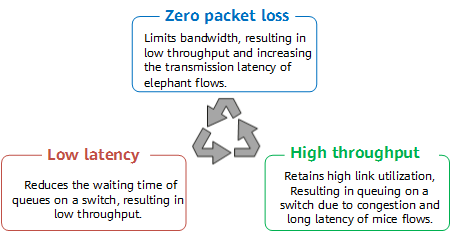

Zero packet loss, low latency, and high throughput are the three core indicators of a next-generation DCN that meets the efficient data processing requirements of the AI era and copes with the challenges of distributed architectures. These three indicators affect one another, which makes it very difficult to achieve optimal performance on all of them at the same time.

Three core indicators affecting each other

The core technology for achieving zero packet loss, low latency, and high throughput is the congestion control algorithm. Data Center Quantized Congestion Notification (DCQCN), the widely used congestion control algorithm for lossless networks, requires collaboration between NICs and the network. Each node must be configured with dozens of parameters, adding up to hundreds of thousands of parameters across the entire network. To keep configuration manageable, only generic settings can be used in practice, so the three core indicators cannot all be achieved across different traffic models.
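To give a sense of what DCQCN tunes, the sketch below models only the sender-side (reaction point) rate control described in the original DCQCN paper (Zhu et al., SIGCOMM 2015). The sender cuts its rate when a Congestion Notification Packet (CNP) arrives and then recovers in stages. The model is simplified: there is no byte counter and no hyper-increase stage. The parameter values are illustrative, not recommended settings.

```python
class DcqcnSender:
    """Simplified DCQCN reaction point: per-flow rate control on the sender NIC.

    Parameter defaults are illustrative only, not tuned or vendor values.
    """

    def __init__(self, line_rate_gbps: float = 100.0, g: float = 1 / 256,
                 rate_ai_gbps: float = 0.5, fast_recovery_steps: int = 5) -> None:
        self.line_rate = line_rate_gbps
        self.g = g                          # gain of the alpha moving average
        self.rate_ai = rate_ai_gbps         # additive-increase step
        self.fast_recovery_steps = fast_recovery_steps
        self.rc = line_rate_gbps            # current sending rate
        self.rt = line_rate_gbps            # target rate to recover toward
        self.alpha = 1.0                    # estimate of congestion severity
        self.increase_events = 0

    def on_cnp(self) -> None:
        """A CNP arrived: remember the current rate, cut it, raise alpha."""
        self.rt = self.rc
        self.rc *= 1 - self.alpha / 2
        self.alpha = (1 - self.g) * self.alpha + self.g
        self.increase_events = 0

    def on_alpha_timer(self) -> None:
        """No CNP during the last alpha-update period: let alpha decay."""
        self.alpha *= 1 - self.g

    def on_increase_event(self) -> None:
        """Rate-increase timer fired: fast recovery first, then additive increase."""
        self.increase_events += 1
        if self.increase_events > self.fast_recovery_steps:
            self.rt = min(self.rt + self.rate_ai, self.line_rate)
        self.rc = (self.rt + self.rc) / 2


sender = DcqcnSender()
sender.on_cnp()                 # congestion signalled: rate halves while alpha = 1
for _ in range(5):
    sender.on_increase_event()  # fast recovery toward the pre-cut rate
print(round(sender.rc, 2))
```

Even this reduced model exposes four knobs per flow. The full algorithm adds more timers and byte counters, and switch-side ECN thresholds must match them. This is why tuning the whole network by hand is impractical.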

What Are the Differences Between a Hyper-Converged Data Center Network and the HCI?

Hyper-converged infrastructure (HCI) is a set of unit devices that provide computing, network, and storage resources and support server virtualization. Multiple such units can be aggregated over a network to scale out seamlessly and form a unified resource pool.

HCI integrates virtualized computing and storage resources into one system platform. In essence, a hypervisor (virtualization software) runs on physical servers, and a distributed storage service runs on top of it for use by virtual machines (VMs). The distributed storage service can run in a VM or as a module integrated with the hypervisor. Beyond computing and storage, HCI can also integrate networks and other platforms and services. It is widely recognized in the industry that a software-defined distributed storage layer plus virtualized computing is the minimum set of an HCI architecture.

Unlike HCI, a hyper-converged DCN focuses only on the network layer and provides a brand-new network-layer solution for interconnecting computing and storage. With a hyper-converged DCN, computing and storage resources do not need to be restructured or converged. In addition, because it is based on Ethernet, a hyper-converged DCN can be expanded quickly and at low cost.

What Is Huawei's Hyper-Converged Data Center Network Solution?

Drawing on years of successful DCN practice, Huawei has developed traffic characteristic models to cope with dynamic traffic and tune massive numbers of parameters. Traffic characteristics and network status are collected from switches in real time, and the innovative iLossless algorithm uses them to make decisions and dynamically adjust network parameter settings. In this way, switch buffers are used efficiently and no packet loss occurs on the entire network. Based on the Clos networking model, Huawei uses CloudEngine series switches to build a hyper-converged DCN with an intelligent spine-leaf architecture. The architecture combines computing and network intelligence, as well as global and local intelligence, to achieve low latency and zero packet loss.

In addition, Huawei's intelligent analysis platform iMaster NCE-FabricInsight uses AI algorithms to predict future traffic models based on traffic characteristics and network status data collected network-wide. It then corrects the parameter settings of NICs and the network in real time to meet application requirements.

Based on an open Ethernet network, Huawei's hyper-converged DCN uses unique AI algorithms to deliver low cost, zero packet loss, and low latency over Ethernet. The hyper-converged DCN is the best choice for DCs in the AI era to build a unified, converged network architecture.

Transformation from independent networking to converged networking

What Are the Benefits of Huawei's Hyper-Converged Data Center Network?

Traditional FC and IB private networks are expensive and closed, require dedicated O&M, and do not support software-defined networking (SDN), so they cannot meet automated deployment requirements such as cloud-network synergy.

Huawei's hyper-converged DCN offers the following benefits:

- Improving E2E service performance

According to EANTC test results, Huawei's hyper-converged DCN reduces computing latency by 44.3% in HPC scenarios, improves input/output operations per second (IOPS) by 25% in distributed storage scenarios, and ensures zero packet loss in all scenarios.

Huawei's hyper-converged DCN can provide 25GE, 100GE, and 400GE networking, meeting the network bandwidth requirements of massive data in the AI era.

- Reducing costs and improving efficiency

Of total DC investment, network devices account for only about 10%, while servers and storage devices account for 85%. Huawei's hyper-converged DCN can improve storage performance by 25% and computing efficiency by 40%, bringing a return on investment (ROI) of several tens of times.

- SDN automation and intelligent O&M

Huawei's hyper-converged DCN supports full-lifecycle service automation for SDN cloud-network synergy, reducing OPEX by at least 60%. In addition, because Huawei's hyper-converged DCN is essentially an Ethernet network, traditional Ethernet O&M personnel can manage it and perform multi-dimensional, visualized network O&M on Huawei's intelligent analysis platform iMaster NCE-FabricInsight. For details, see CloudFabric.

How Does Huawei's Hyper-Converged Data Center Network Work?

Currently, RDMA traffic is carried over Ethernet networks using the RDMA over Converged Ethernet version 2 (RoCEv2) protocol. Huawei's hyper-converged DCN uses iLossless, a suite of cooperating technologies, to build a lossless Ethernet network. Together, these technologies solve the congestion-induced packet loss of traditional Ethernet networks and provide RoCEv2 traffic with zero packet loss, low latency, and high throughput, meeting the high-performance requirements of RoCEv2 applications.
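RoCEv2 carries InfiniBand transport headers inside ordinary UDP/IP packets, which is what allows a routed Ethernet fabric to forward RDMA traffic. The sketch below uses Python's `struct` module to pack a UDP header addressed to the IANA-assigned RoCEv2 port 4791 and the 12-byte InfiniBand Base Transport Header (BTH). It is illustrative only: the opcode, queue pair number, PSN, and source port are hypothetical, and the IP header and invariant CRC (ICRC) are omitted.

```python
import struct

ROCEV2_UDP_PORT = 4791  # IANA-assigned UDP destination port for RoCEv2


def build_bth(opcode: int, dest_qp: int, psn: int, pkey: int = 0xFFFF,
              ack_req: bool = False) -> bytes:
    """Pack a 12-byte InfiniBand Base Transport Header (BTH)."""
    flags = 0                                           # SE, M, PadCnt, TVer all 0
    word_qp = dest_qp & 0xFFFFFF                        # 8 reserved bits + 24-bit QP
    word_psn = (int(ack_req) << 31) | (psn & 0xFFFFFF)  # AckReq bit + 24-bit PSN
    return struct.pack("!BBHII", opcode, flags, pkey, word_qp, word_psn)


def build_udp(src_port: int, payload: bytes) -> bytes:
    """Pack a UDP header to the RoCEv2 port; checksum left at 0 (not computed)."""
    # NICs typically vary the source port per queue pair so that ECMP
    # can spread different RDMA flows across fabric paths.
    return struct.pack("!HHHH", src_port, ROCEV2_UDP_PORT, 8 + len(payload), 0)


bth = build_bth(opcode=0x04, dest_qp=0x12, psn=1000)  # hypothetical RC SEND Only
payload = bth + b"hello"  # BTH followed by application data (no ICRC here)
segment = build_udp(src_port=49152, payload=payload) + payload
print(len(bth), len(segment))
```

Because switches see only a standard UDP/IP packet, the lossless behavior has to come from the Ethernet fabric itself, which is the role of the technologies listed below.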

- Flow control technology

Flow control, implemented hop by hop with PFC, suppresses the data transmission rate of the traffic sender so that the traffic receiver can accept all packets, preventing packet loss when the receiving interface is congested. Huawei provides PFC deadlock detection and PFC deadlock prevention mechanisms to prevent PFC deadlocks.

- Congestion control technology

Congestion control is a global process that enables the network to carry the existing traffic load. To mitigate and relieve congestion, forwarding devices, traffic senders, and traffic receivers must collaborate, using congestion feedback mechanisms to adjust traffic across the entire network. Huawei provides Artificial Intelligence Explicit Congestion Notification (AI ECN), Intelligent Quantized Congestion Notification (iQCN), ECN Overlay, and Network-based Proactive Congestion Control (NPCC) to solve the problems of traditional DCQCN.

- iNOF technology

To better serve storage systems, Huawei provides the Intelligent Lossless NVMe over Fabrics (iNOF) function for fast host management and control.
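The flow control technology described above relies on Priority-based Flow Control (PFC, IEEE 802.1Qbb). PFC applies backpressure per priority queue: when an ingress queue crosses an XOFF threshold, the switch sends a PAUSE frame upstream, and when the queue drains below a lower XON threshold, it resumes the sender. The toy model below shows that hysteresis. The rates and thresholds are hypothetical, and the model ignores the in-flight data that real headroom buffers must absorb after a PAUSE is sent. It also does not model deadlocks.

```python
def simulate_priority_queue(arrival: int, drain: int, slots: int, buffer: int,
                            pfc: bool, xoff: int = 0, xon: int = 0) -> tuple[int, int]:
    """Model one priority queue on a switch ingress port.

    Per time slot the upstream port sends `arrival` packets (unless paused) and
    the egress drains `drain` packets. Returns (packets_dropped, pauses_sent).
    Simplification: the upstream port stops instantly when paused, so no
    headroom for in-flight packets is modeled.
    """
    queue = dropped = pauses = 0
    paused = False
    for _ in range(slots):
        if not paused:
            accepted = min(arrival, buffer - queue)
            dropped += arrival - accepted      # tail drop once the buffer is full
            queue += accepted
        queue = max(queue - drain, 0)          # egress port drains the queue
        if pfc and not paused and queue >= xoff:
            paused = True                      # XOFF: send PAUSE upstream
            pauses += 1
        elif pfc and paused and queue <= xon:
            paused = False                     # XON: send PAUSE with zero time (resume)
    return dropped, pauses


# Hypothetical numbers: 3 packets/slot arrive, 2 drain, 50-packet buffer.
print(simulate_priority_queue(3, 2, 100, buffer=50, pfc=False))
print(simulate_priority_queue(3, 2, 100, buffer=50, pfc=True, xoff=40, xon=30))
```

Without PFC, the queue overflows and drops packets. With PFC, the queue oscillates between the XON and XOFF thresholds and nothing is dropped. However, the upstream port sits idle while paused, which is the throughput cost of backpressure noted earlier.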

For details about the fundamentals and configuration procedure of the preceding functions, see "Configuration > Configuration Guide > Intelligent Lossless Network Configuration" in Huawei CloudEngine Series Switch Product Documentation.
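On the switch side, DCQCN-style congestion control depends on ECN marking that behaves like RED/WRED. No packets are marked while the queue is below a low threshold Kmin. Between Kmin and a high threshold Kmax, the marking probability rises linearly up to Pmax. Above Kmax, every packet is marked. The sketch below shows this standard curve. Choosing Kmin, Kmax, and Pmax for each traffic pattern is the kind of tuning that AI ECN automates, but how AI ECN actually selects the values is not described here and is not modeled. The threshold values are hypothetical.

```python
import random
from collections.abc import Callable


def ecn_mark_probability(queue_kb: float, kmin_kb: float, kmax_kb: float,
                         pmax: float) -> float:
    """RED/WRED-style ECN marking probability for a given queue depth."""
    if queue_kb <= kmin_kb:
        return 0.0                       # short queue: never mark
    if queue_kb >= kmax_kb:
        return 1.0                       # long queue: mark every packet
    # Between the thresholds, probability grows linearly from 0 to pmax.
    return pmax * (queue_kb - kmin_kb) / (kmax_kb - kmin_kb)


def should_mark(queue_kb: float, kmin_kb: float, kmax_kb: float, pmax: float,
                rng: Callable[[], float] = random.random) -> bool:
    """Decide whether to set the CE codepoint on one ECN-capable packet."""
    return rng() < ecn_mark_probability(queue_kb, kmin_kb, kmax_kb, pmax)


# Hypothetical thresholds: Kmin = 100 KB, Kmax = 400 KB, Pmax = 0.2.
for depth in (50, 100, 250, 399, 500):
    print(depth, round(ecn_mark_probability(depth, 100, 400, 0.2), 3))
```

Lower thresholds make senders back off earlier, which keeps queues and latency short but risks leaving the link underused. Higher thresholds do the opposite. This is one concrete form of the trade-off among latency, throughput, and packet loss, and it explains why a single static setting rarely suits every traffic pattern.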

- Author: Zhang Fan

- Updated on: 2023-12-01
