Computing Network

As the data economy surges, the amount of data generated is expected to reach unprecedented levels. To process all these data, a strong cloud-edge-device computing power and a wide-coverage connected network will be required. A computing network is a new information infrastructure that allocates and flexibly schedules computing, storage, and network resources among the cloud, edge, and device on demand.

What Is Computing Power?

Computing power is the ability to carry out computation.

Definition of computing power

As the construction of digital infrastructure gains momentum, the entire industry is pursuing higher and more inclusive computing power. Undoubtedly, computing power has attracted unprecedented attention. So, what exactly is computing power?

Computing power refers to the computing capability of a device. Without computing power, software and hardware in devices such as mobile phones, PCs, and even supercomputers would be unable to function properly. Take a PC as an example. Higher CPU, graphics card, and memory specifications will enable the PC to deliver higher computing power.

Computing power is the computing capability of a device

Computing power measurement

In the big data era, data and computing power are both immense. Here is what each of the related unit prefixes represents: K (Kilo) indicates 10^3, M (Mega) indicates 10^6, G (Giga) indicates 10^9, T (Tera) indicates 10^12, P (Peta) indicates 10^15, E (Exa) indicates 10^18, Z (Zetta) indicates 10^21, and Y (Yotta) indicates 10^24.
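As a quick illustration of how these prefixes relate to one another, the following sketch converts a quantity from one prefix to another. The names `SI_EXPONENTS` and `convert` are made up for this example.

```python
# Powers of ten for the prefixes listed above.
SI_EXPONENTS = {"K": 3, "M": 6, "G": 9, "T": 12, "P": 15, "E": 18, "Z": 21, "Y": 24}


def convert(value: float, src: str, dst: str) -> float:
    """Convert a value expressed with prefix `src` into prefix `dst`."""
    return value * 10.0 ** (SI_EXPONENTS[src] - SI_EXPONENTS[dst])


# 1000 P (as in 1000 PFLOPS) expressed in E (EFLOPS).
print(convert(1000, "P", "E"))
```

In other words, moving up one prefix divides the number by 1000, so 1000 PFLOPS and 1 EFLOPS describe the same speed.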

A computing power unit is an indicator and benchmark for measuring the computing power. Currently, there are multiple methods used for measurement. Common ones include Million Instructions Per Second (MIPS), Dhrystone Million Instructions Per Second (DMIPS), Operations Per Second (OPS), Floating-point Operations Per Second (FLOPS), and Hash/s (Hash Per Second).

FLOPS has always been one of the main indicators for measuring the computing speed of a computer. At present, the computing speed of a PC is at the GFLOPS level. By comparison, the computing speeds of China's supercomputer Sunway TaihuLight and of Pengcheng Cloud Brain II (based on a Huawei Atlas 900 cluster) from Pengcheng Lab are about 93.015 PFLOPS and 1000 PFLOPS, respectively. 1000 PFLOPS is equal to the computing power of tens or even hundreds of millions of PCs.
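The comparison with PCs can be checked with simple arithmetic. The sketch below assumes PC speeds of 10 and 100 GFLOPS, which are illustrative values only (the text says no more than "GFLOPS level"); `equivalent_pcs` is a hypothetical helper.

```python
GIGA, PETA = 10**9, 10**15


def equivalent_pcs(cluster_pflops: int, pc_gflops: int) -> int:
    """Number of PCs whose combined FLOPS matches the cluster (integer division)."""
    return (cluster_pflops * PETA) // (pc_gflops * GIGA)


# Assumed PC speeds of 10 and 100 GFLOPS; the text only says "GFLOPS level".
for pc_gflops in (10, 100):
    print(pc_gflops, "GFLOPS per PC ->", equivalent_pcs(1000, pc_gflops), "PCs")
```

Under these assumptions the result falls between ten million and one hundred million PCs, in line with the range quoted above.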

Hash/s offers another example. The number of hash computations that a mining machine can perform per second in its attempt to obtain Bitcoins represents its computing power. The ratio of a miner's computing power to the total computing power of the entire Bitcoin network (that is, of all mining machines participating in mining) represents the probability that the miner wins the mining competition. The computing power of a PC today is at the GHash/s level. Currently, the total computing power of the Bitcoin network has reached about 200 EHash/s (fluctuating daily). Based on this, the probability that a PC succeeds in mining is roughly 1 in 10 billion.
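The ratio described above can be worked through as follows. This is a minimal sketch: the 200 EHash/s network figure comes from the text, while the 20 GHash/s PC hash rate is an assumed value chosen only to illustrate the order of magnitude.

```python
from fractions import Fraction

GIGA, EXA = 10**9, 10**18


def win_probability(miner_hashrate: int, network_hashrate: int) -> Fraction:
    """Miner's share of the total network hash rate, as described in the text."""
    return Fraction(miner_hashrate, network_hashrate)


network = 200 * EXA   # 200 EHash/s, from the text
pc = 20 * GIGA        # assumed PC hash rate (hypothetical)
p = win_probability(pc, network)
print(f"1 in {p.denominator // p.numerator:,}")
```

A slower PC at 1 GHash/s would have a share of 1 in 200 billion, so the exact odds depend heavily on the hash rate assumed.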

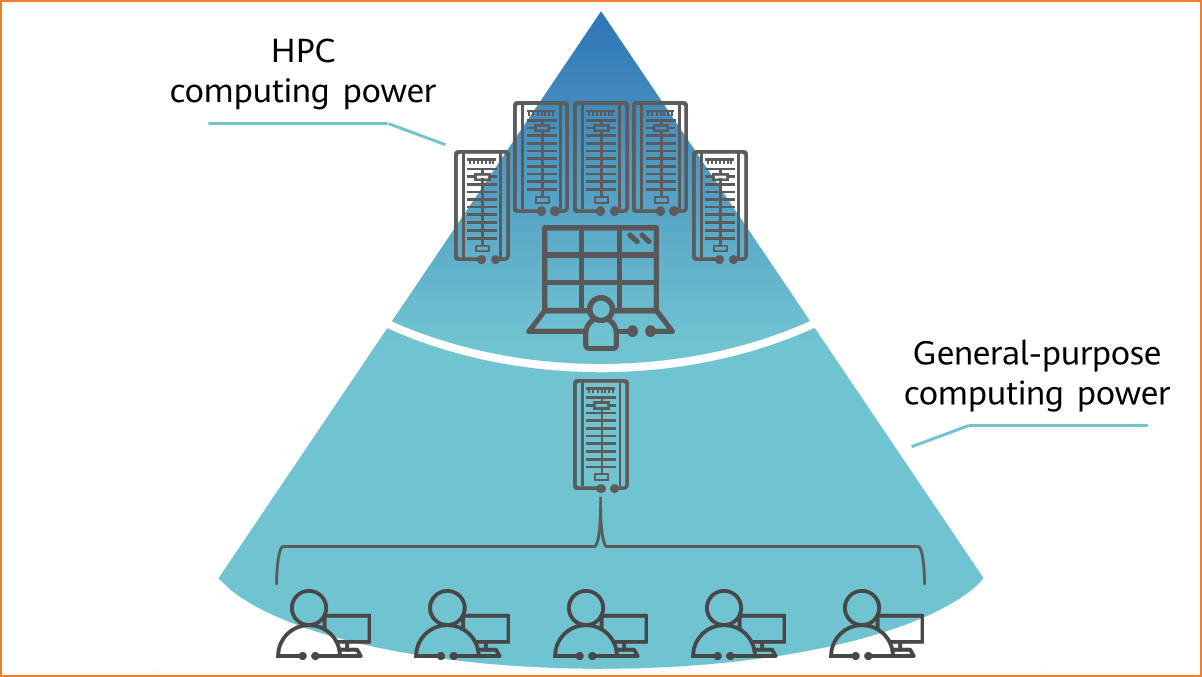

Classification of computing power

The computing power is classified into the following types based on application fields:

- General-purpose computing power, featuring smaller computing volumes, limited power consumption, and common application scenarios.

- High-performance computing (HPC) power, featuring larger computing volumes. A single task might invoke a huge number of computing resources. HPC is a computer cluster system that connects multiple computer systems using various interconnection technologies and utilizes the integrated computing capability of all connected systems to process large-scale computing tasks. Therefore, HPC is also called high-performance computing cluster.

HPC and general-purpose computing power

HPC power is further classified into the following types based on application fields:

- Scientific computing: physics and chemistry, meteorology and environmental protection, life sciences, oil exploration, astronomical exploration, etc.

- Engineering computing: computer-aided engineering and manufacturing, electronic design automation, electromagnetic simulation, etc.

- Intelligent computing: artificial intelligence (AI) computing, including machine learning, deep learning, and data analysis.

As we enter the intelligent era and witness a surging demand for AI computing power, the use of AI in scientific and engineering computing is becoming the trend. For this reason, computing power is also commonly classified into general-purpose computing power and AI computing power.

Why is computing power important?

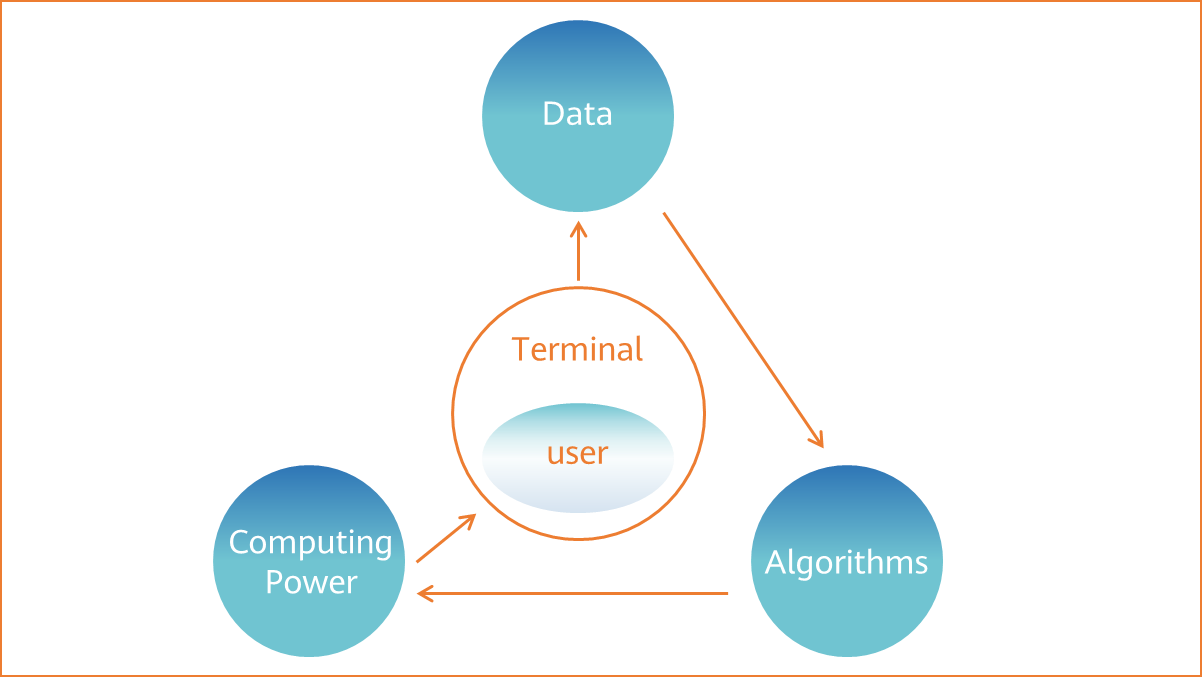

Three elements constructing an intelligent world

An intelligent world is built on knowledge and intelligence. In the digital world, these correspond to three elements: data, computing power, and algorithms.


Scientists develop algorithms using massive data obtained from people and things in various industries. Processing this data requires a large amount of computing power, which is supplied by numerous computing devices and serves as the basic platform for intelligence.

Rising demand for computing power

According to Computing 2030 released by Huawei, we will enter the YB data era in 2030, when the global data volume will increase by 1 YB each year. General-purpose computing power will increase 10-fold to 3.3 ZFLOPS, and AI computing power will increase 500-fold to exceed 100 ZFLOPS. This is at the 10^23 FLOPS level, equivalent to the computing power of a million Sunway TaihuLights (China's supercomputer).
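These figures can be cross-checked with a few lines of arithmetic. The sketch below uses only numbers quoted in this article (100 ZFLOPS, 3.3 ZFLOPS, the growth factors, and the 93.015 PFLOPS TaihuLight figure); the variable names are illustrative.

```python
PETA, ZETTA = 10**15, 10**21

ai_2030_flops = 100 * ZETTA          # lower bound: "exceeds 100 ZFLOPS"
taihulight_flops = 93.015 * PETA     # figure quoted earlier in the article

print(f"AI computing power in 2030: {ai_2030_flops:.0e} FLOPS")
print(f"TaihuLight equivalents: {ai_2030_flops / taihulight_flops:,.0f}")

# Baselines implied by the stated growth factors, in ZFLOPS.
general_baseline_zflops = 3.3 / 10   # 10-fold growth to 3.3 ZFLOPS
ai_baseline_zflops = 100 / 500       # 500-fold growth to 100 ZFLOPS
print(general_baseline_zflops, ai_baseline_zflops)
```

The ratio comes out slightly above one million, which is where the "a million Sunway TaihuLights" comparison comes from.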

And these data and computing power requirements in the future will mainly come from diversified computing scenarios.

Future computing scenarios

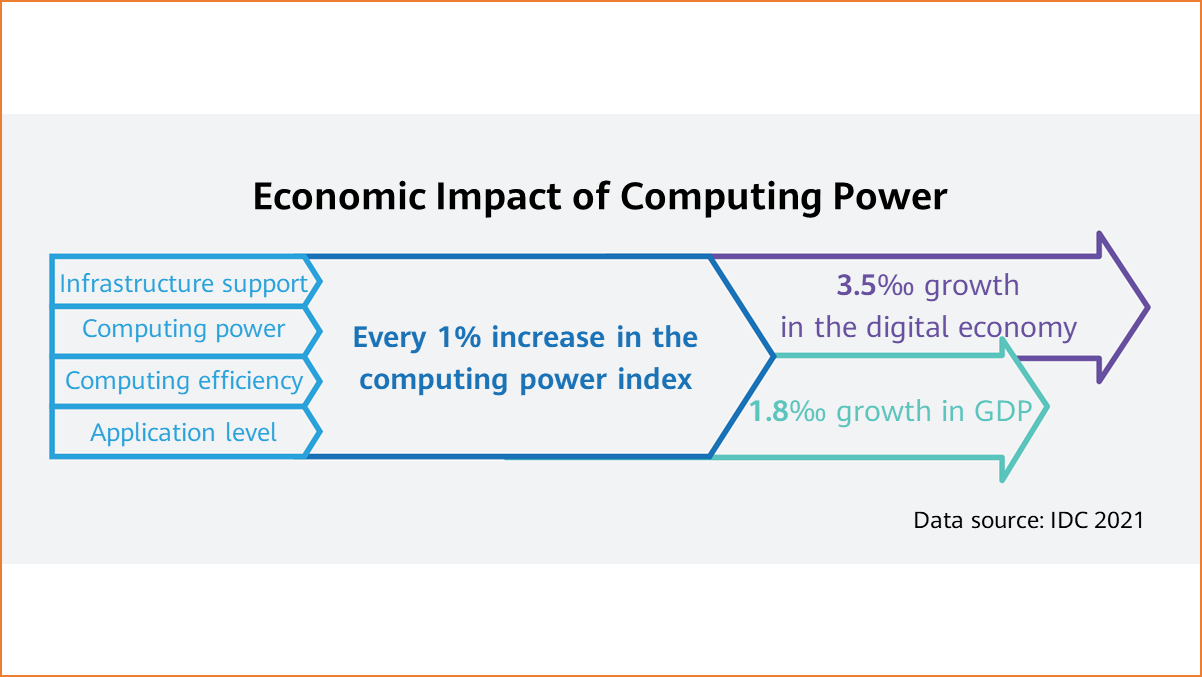

Computing power generating productivity

According to the 2021-2022 Global Computing Power Index Assessment Report jointly released by Inspur, IDC, and Tsinghua University on March 17, 2022, the proportion of the digital economy is expected to reach 41.5% by 2025 driven by the continuous development of the global digital economy. At the same time, the national computing power index and GDP trends show a notable positive correlation. Every 1% increase in the computing power index of 15 key countries will lead to 3.5‰ growth in the digital economy and 1.8‰ growth in GDP. This trend is expected to continue from 2021 to 2025.

Economic impact of computing power

In addition, when the computing power index of a country reaches 40 or above, the driving force of the country's GDP growth will increase by 1.5 times for every 1% increase in the computing power index. This increase jumps to 3 times when the country's computing power index reaches 60 points or above, boosting economic growth in a more significant way.
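One way to read these figures is as a simple piecewise rule. The sketch below is an interpretation, not the report's own model: it assumes that the 1.5-fold and 3-fold factors scale the baseline of 1.8‰ GDP growth per 1% increase in the index. The function name `gdp_growth_permille` is hypothetical.

```python
def gdp_growth_permille(index_score: float, index_increase_pct: float) -> float:
    """Estimated GDP growth (per mille) for a given rise in the computing power index.

    Assumption: the 1.5x and 3x factors scale the 1.8 per-mille baseline.
    """
    base_per_pct = 1.8  # per mille of GDP per 1% index increase, from the text
    if index_score >= 60:
        multiplier = 3.0
    elif index_score >= 40:
        multiplier = 1.5
    else:
        multiplier = 1.0
    return base_per_pct * multiplier * index_increase_pct


for score in (30, 45, 65):
    print(score, round(gdp_growth_permille(score, 1.0), 2))
```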

Therefore, in the digital economy era, computing power has become the core engine that drives national economic growth. To improve a country's computing power is to stimulate its economic development. The higher the computing power index, the more evident the economic growth.

Why Is Networking the Computing Power Necessary?

Computing power is ubiquitously distributed

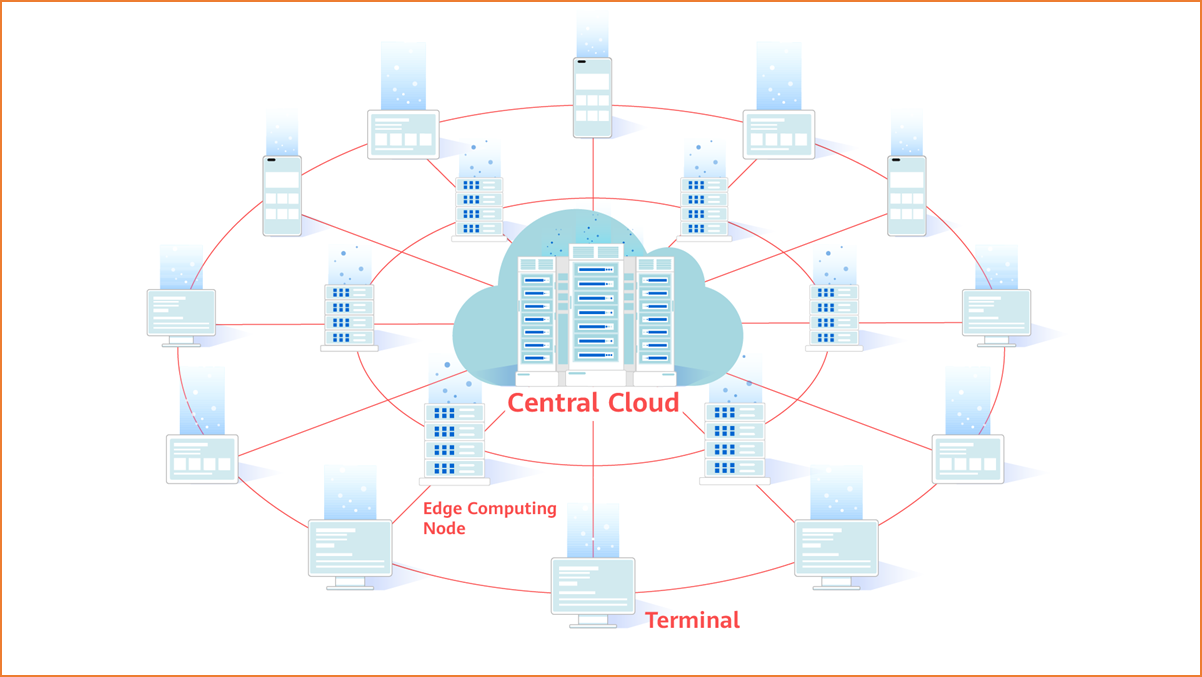

Evolving from the cloud to ubiquitous distribution, computing infrastructure extends from a central point to edges and devices, forming a three-level computing architecture comprised of cloud, edges, and devices.

Cloud-edge-device computing architecture

The "central point" here refers to a cloud computing data center. Cloud computing is a cloud-network-based super computing mode. In a remote data center, thousands of computers and servers are connected and together they form a computing cloud. Various industries and individuals access the cloud computing data center through the network and perform data storage and computing as and when required. Cloud computing is classified into public cloud, private cloud (such as communication cloud), and hybrid cloud by deployment type. Different clouds correspond to different user groups.

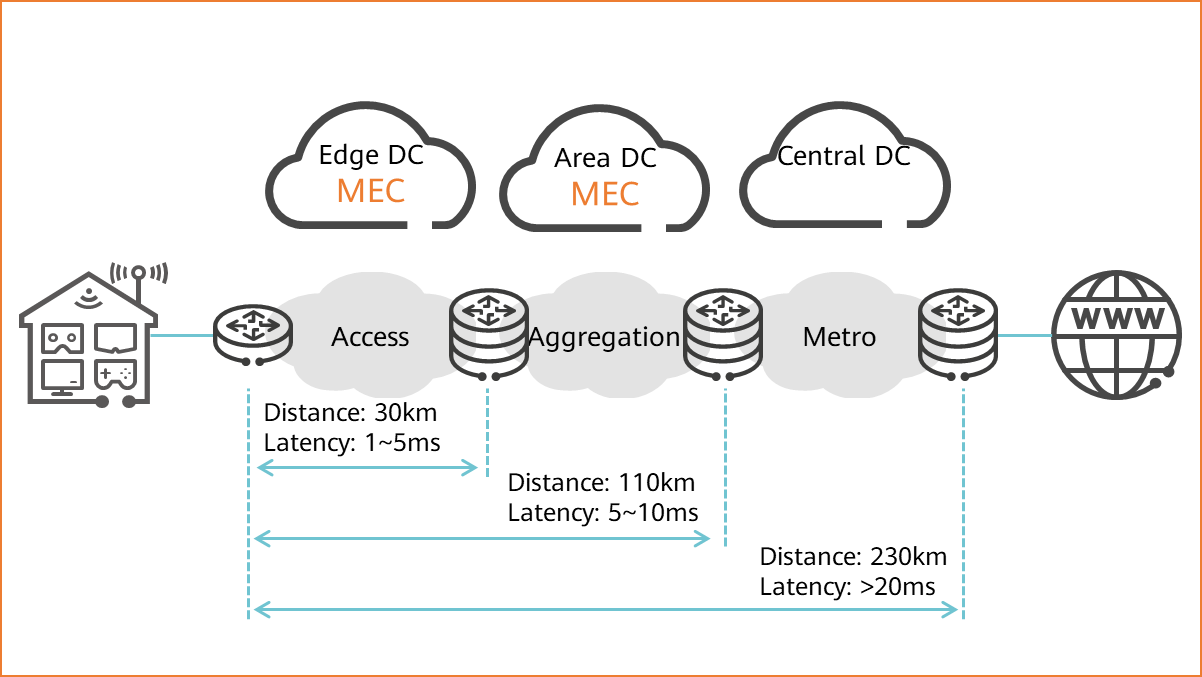

The edge refers to multi-access edge computing (MEC), which is relative to cloud computing. Cloud computing uploads all data to the cloud data center where computing resources are centralized for processing and each access request must be sent to the cloud. Consequently, the disadvantages of the traditional cloud computing become more noticeable when spikes in the IoT data volume occur.

- Massive data processing requirements cannot be met. With the convergence of the Internet and various industries, especially since IoT technologies have gained popularity, computing requirements increase explosively. The traditional cloud computing architecture cannot meet such huge computing requirements.

- Real-time data processing requirements cannot be met. IoT data, after being collected by terminals, needs to be transmitted to the cloud computing center, and the result is then returned after cluster computing. This inevitably leads to a long response time. However, some emerging application scenarios, such as autonomous driving and smart mining, require short response time, making traditional cloud computing impractical.

To some extent, edge computing can solve these problems. Data generated by IoT terminals does not need to be transmitted to remote cloud data centers for processing. Instead, data is analyzed and processed at the nearest network edge, which is more efficient and secure. Edge computing can also be performed by the cloud, that is, the regional cloud or the edge cloud.

Edge computing

In the future, the edge computing power will be greater than the central computing power.

The device side refers to all devices that have networking and computing capabilities, such as PCs, mobile phones, smart TVs, home STBs, and smart water and electricity meters. In the IoT era, huge numbers of terminals will be connected to the network to aggregate the existing computing power of idle devices. This is how computing power sharing works. In this sense, computing power is ubiquitous.

Computing power requiring network scheduling

Due to the ubiquitous evolution of the cloud-edge-device computing architecture, the computing power will not be concentrated in the data center. Instead, it will be widely distributed at the edge or device side.

These computing nodes need to be interconnected through a network so that their computing resources can be shared, scheduled, used, and coordinated.

As mentioned in the China Mobile Computing Network White Paper, hydropower cannot be divorced from the water network, electric power cannot be transmitted without the power grid, and computing power development cannot be achieved without the computing network. As a new infrastructure, the computing network should provide social-level services featuring global access and instant availability, bringing together ubiquitous networks, computing power, and intelligence.

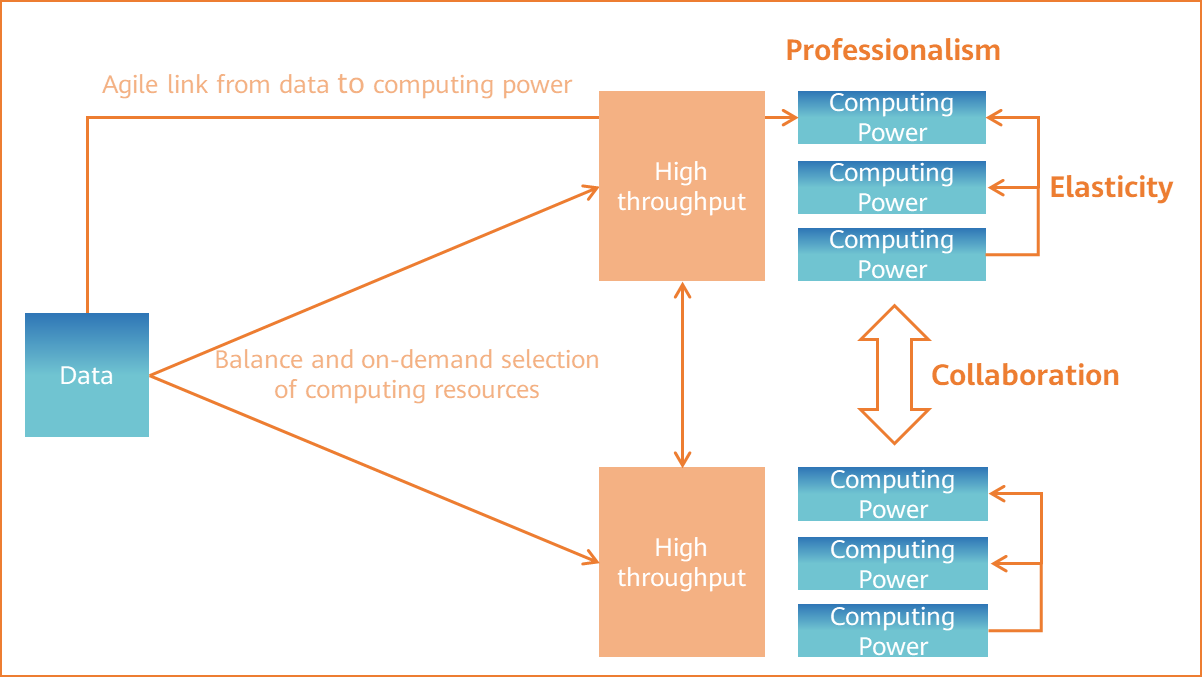

China Unicom Computing Network White Paper also mentions that computing networks are required to implement efficient scheduling of cloud-edge-device computing power. Specifically, efficient computing power must have three key elements to achieve high throughput, agile connection, and balanced on-demand selection of data and computing power. These three elements, described below, can be connected only through a network.

- Professionalism: Focusing on dedicated scenarios and completing more computing workload with lower power consumption and costs. For example, analyzing and processing videos at the edge with high data throughput.

- Elasticity: Processing data more elastically. The network provides agile connection establishment and adjustment capabilities between data requirements and computing resources.

- Collaboration: Fully utilizing resources in collaboration between multiple cores in a processor, computing power balancing among multiple servers in a data center, and on-demand computing power at the edge of the entire network.

Three elements ensuring efficient computing power

What Is a Computing Network?

Definition of a computing network

Technological innovations such as the Internet, big data, cloud computing, AI, and blockchain have promoted the development of the digital economy. As the data economy surges, the amount of data generated is expected to reach unprecedented levels. To process all these data, a strong cloud-edge-device computing power and a wide-coverage connected network will be required.

A computing network is a new information infrastructure that allocates and flexibly schedules computing, storage, and network resources among the cloud, edge, and device based on service requirements. Simply put, it is a computing resource service. In the future, the ability to flexibly reschedule computing tasks to appropriate places will be as important to enterprise customers and individual users as the network and the cloud are today.

A network exists to improve efficiency. For example, the telephone network facilitates efficient communication, and the Internet facilitates efficient connections. And now the computing network has emerged to improve the collaboration efficiency of cloud-edge-device computing. Unlike traditional networks that serve us directly, the computing network benefits us indirectly as it serves intelligent machines.

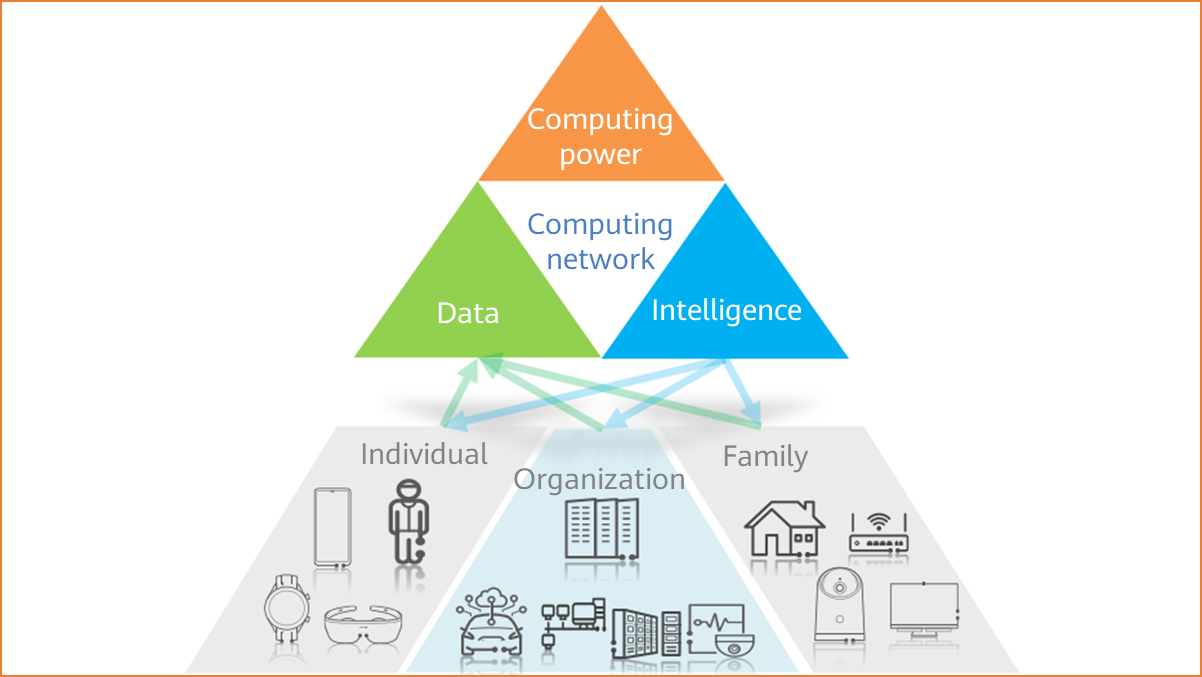

The computing network builds interconnection between massive data, efficient computing power, and ubiquitous intelligence, bringing across-the-board intelligence to each person, home, and organization.

Building an interconnection network between data, computing power, and intelligence

The Communications Network 2030 released by Huawei shares similar views: The computing network reveals an important shift in the network design concept from human-oriented cognition to machine-oriented cognition (AI), connecting massive user data and multi-level computing power services.

A computing network connects central computing nodes distributed at different geographical locations through new network technologies. The purpose is to perceive the status of computing resources in real time, coordinate the allocation and scheduling of computing tasks, and transmit data to form a global network that senses, allocates, and schedules computing resources. On this network, computing power, data, and applications can be aggregated and shared.

Computing centers have multiple layers and management domains. Different computing centers differ greatly. The types of applications deployed, datasets stored, and computing structures employed may vary from center to center. Management policies, billing standards, and carbon emissions standards may also vary. Several challenges must be addressed if we are to build a computing network: coordinating different computing centers; establishing a reliable transaction and management mechanism for computing power, data, and applications; and overcoming the lack of unified standards. The ultimate goal is to build a computing architecture that is open, energy efficient, and achieves high resource utilization.

One huge national computing network

The computing network is not simply a network that connects all computing nodes. In fact, it enables the computing power of all computing nodes to be aggregated into a computing power pool, thereby implementing global access and instant availability.

Functions of the computing network

The computing network used to have different names, such as Computing-aware Network, Computing First Network, Computing Force Network, and Computing Power Network.

Regardless of the name, the computing network, as an emerging concept itself, is all about computing power resource scheduling, which needs a corresponding computing power resource scheduling algorithm. And this algorithm has two basic dimensions: computing and network.

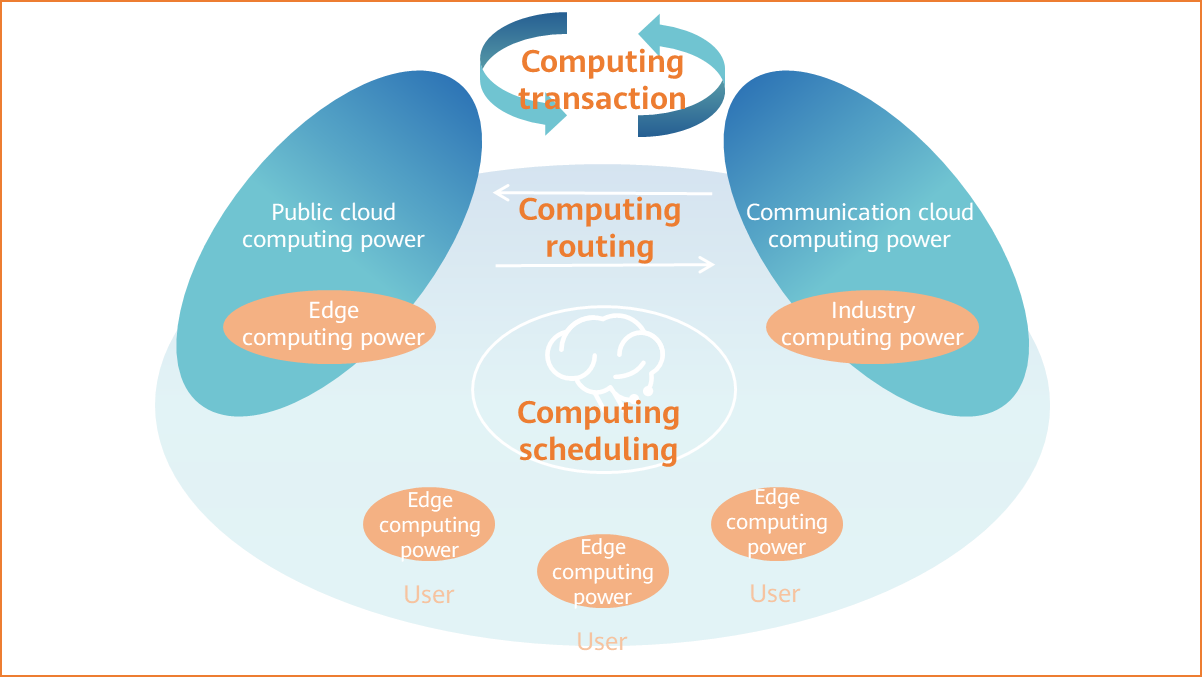

New factors such as 5G, edge computing, AI, and blockchain have brought new variables that need to be comprehensively considered by the algorithm. And this is where the three major functions of the computing network come from.

- Computing routes: The network can sense the computing power and provide the optimal routes for computing.

- Computing scheduling: The computing network brain intelligently orchestrates and elastically schedules computing power resources on the entire network.

- Computing transaction: a blockchain-based trusted computing power and network transaction platform.
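To make the two dimensions of the scheduling algorithm (computing and network) more concrete, here is a minimal sketch of a node selection step. It is not the algorithm of any particular product: the `Node` fields, the weights, and the cost formula are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_tflops: float   # computing dimension: spare computing power
    latency_ms: float    # network dimension: latency from the user to the node


def pick_node(nodes: list[Node], need_tflops: float, max_latency_ms: float,
              latency_weight: float = 1.0, load_weight: float = 1.0) -> Optional[Node]:
    """Return the feasible node with the lowest combined cost, or None."""
    feasible = [n for n in nodes
                if n.free_tflops >= need_tflops and n.latency_ms <= max_latency_ms]
    if not feasible:
        return None

    # Lower latency and a smaller share of the node's spare capacity both reduce the cost.
    def cost(n: Node) -> float:
        return latency_weight * n.latency_ms + load_weight * need_tflops / n.free_tflops

    return min(feasible, key=cost)


nodes = [
    Node("cloud", free_tflops=500.0, latency_ms=40.0),
    Node("edge", free_tflops=20.0, latency_ms=5.0),
    Node("device", free_tflops=1.0, latency_ms=1.0),
]
print(pick_node(nodes, need_tflops=10.0, max_latency_ms=20.0).name)
```

In this toy setup, the cloud node is ruled out by the latency limit and the device node by its lack of spare computing power, which leaves the edge node. A real computing network would also have to weigh price, trust, and energy, which is where computing transaction comes in.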

Computing scheduling, computing routing, and computing transaction

Components of the computing network

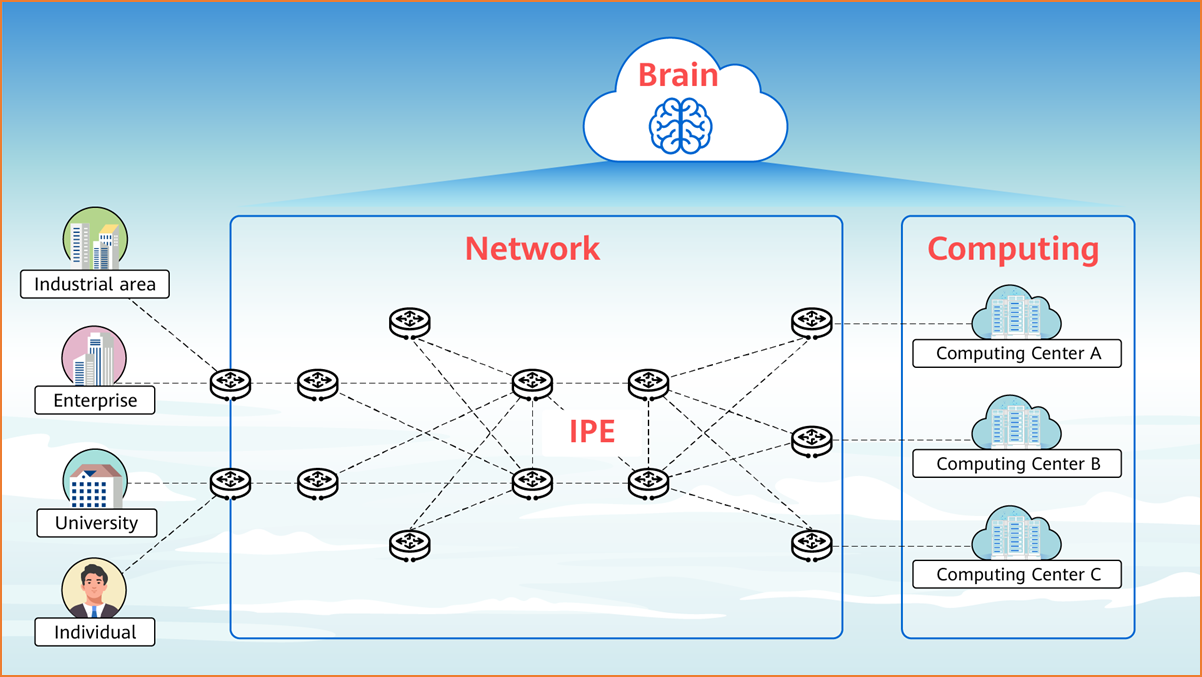

As shown in the preceding figure, the components involved in the computing network include computing and the network itself, along with the brain.

- Computing produces computing power.

- The network connects computing power.

- The brain perceives, orchestrates, schedules, and coordinates computing power on the network.

Specifically, the brain has the following features:

- Visibility: All-domain situational awareness is supported. This makes it possible to obtain real-time computing, network, and data resources in all domains as well as the distribution of clouds, edges, and devices, thereby building an all-domain situational awareness map.

- Scheduling: Cross-domain collaborative scheduling is supported. This intelligently and automatically divides multi-domain collaborative scheduling tasks between each enablement platform to schedule computing, network, and data resources.

- Orchestration: Multi-domain convergent orchestration is supported. This flexibly combines and orchestrates the atomic capabilities of computing, network, and data based on multi-domain convergent service requirements.

- Intelligence: Intelligent decision-making assistance is supported. This intelligently and dynamically calculates the optimal collaboration policy for computing, network, and data based on factors such as SLA requirements of different services, overall network load, and available computing resource pool distribution.

A computing network is like a supercomputer. It first aggregates the computing power of the entire network, and then allocates data to each computing unit of the supercomputer.

Interconnection of computing networks

The computing network works to ensure that the same user experience is delivered regardless of whether computing resources are obtained from the next workstation or from thousands of kilometers away. As such, ultra-high bandwidth, ultra-low latency, massive connections, and multi-service transport are the keys to a high-quality computing network. In this case, how can we build a network that provides high-quality services for computing connections?

Here, we must pay attention to several key features of the computing network.

- Elasticity: The traffic characteristics of the computing network are different from those of the Internet as its requirement for elastic bandwidth is more prominent. For example, in a meteorological computing scenario, the meteorological center needs to perform calculations once or twice a day, and each calculation takes 2 hours. As this requires very high bandwidth, the elastic connection service with adjustable bandwidth and customizable duration is preferred for the meteorological center.

- Agility: The ubiquitous distribution of computing power requires the computing network to have agile access to ubiquitous computing power. When accessing the computing network for computing services, enterprise and individual users do not need to concern themselves about the computing resources and distribution on the network, only about whether they can obtain computing resources in an agile manner.

- Lossless: Computing power is interconnected through the network. Each packet lost on the network or even in the distributed computing process of the cloud data center reduces the computing efficiency. A packet loss rate of just 0.1% is estimated to reduce computing power by 50%. For this reason, lossless transmission within a data center and between data centers is key to a computing network.

- Security: Data, the core element of computing, is a valuable asset. It needs to be securely transmitted to the computing nodes, and the computing result needs to be securely returned. Security is therefore another key to enabling computing networks in various industries, including secure data storage, secure data encryption, secure data isolation between computing tenants, protection against external attacks and data leakage, and secure terminal access.

- Perception: There are many applications (computing power consumers) on the computing network. Providing differentiated SLA assurance for different applications, and providing performance detection and monitoring for important applications, are crucial aspects of a computing network. Perception means that the network must be able to sense both applications and experience. Only in this way is it possible to form the application experience awareness capability of the computing network.

- Visualization: On the computing network, a digital network map with the mapping relationship and layer modeling of the application, computing power, and network needs to be established to effectively reflect the mapping relationship between the digital world and physical world. The digital network map dynamically draws and updates the network overview, implementing clear and visible network topology, transparent tracing of network paths, fault propagation and associated source tracing, and one-click navigation of applications based on the mapping between networks, applications, and computing power on the computing network.
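The elasticity requirement in the meteorological example above can be quantified with a short calculation. The sketch below works out how much of the day the high-bandwidth connection is actually busy, using the figures from the text (one or two runs per day, 2 hours each); `link_utilization` is a hypothetical helper.

```python
def link_utilization(runs_per_day: int, hours_per_run: float) -> float:
    """Fraction of a day during which the high-bandwidth connection is in use."""
    busy_hours = runs_per_day * hours_per_run
    if busy_hours > 24:
        raise ValueError("runs do not fit into one day")
    return busy_hours / 24


for runs in (1, 2):
    print(f"{runs} run(s)/day: busy {link_utilization(runs, 2):.1%} of the time")
```

A fixed line sized for the peak would sit idle for most of the day, which is why a connection with adjustable bandwidth and customizable duration suits this kind of workload better.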

So what technologies are used to match these key characteristics of the network?

IPv6 Enhanced is an IPv6-based network innovation system that draws on innovative technologies such as SRv6, BIERv6, network slicing, DetNet, IFIT, APN6, SFC, and intelligent lossless networking. It is used to build an intelligent IP computing network that fully connects clouds, edges, and devices and continuously delivers computing power to everything.

Let's look at some of these technologies.

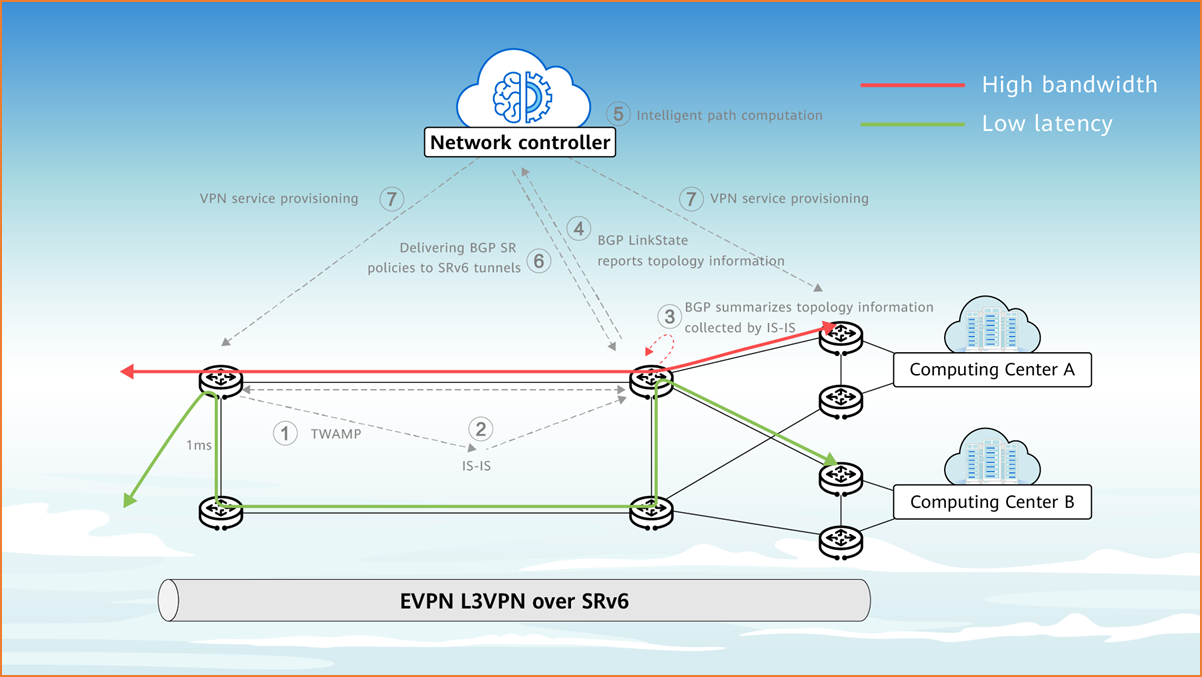

- SRv6 supports ubiquitous access and agile provisioning of computing networks.

Because the computing power needs to provide services for many users, the network needs to meet the requirements of ubiquitous access.

Traditional networks use the MPLS technology, and services are provisioned segment by segment through work order transfer and manual configuration, taking a long time to complete and failing to meet requirements. With SRv6, the computing network can automatically provision services, taking minutes rather than days to complete. SRv6 also replaces the multi-segment networking with E2E networking, implementing ubiquitous access and agile provisioning of massive services with differentiated SLA assurance.

SRv6 supporting ubiquitous access and agile provisioning of computing networks

- Network slicing ensures lossless transmission and secure isolation of computing networks.

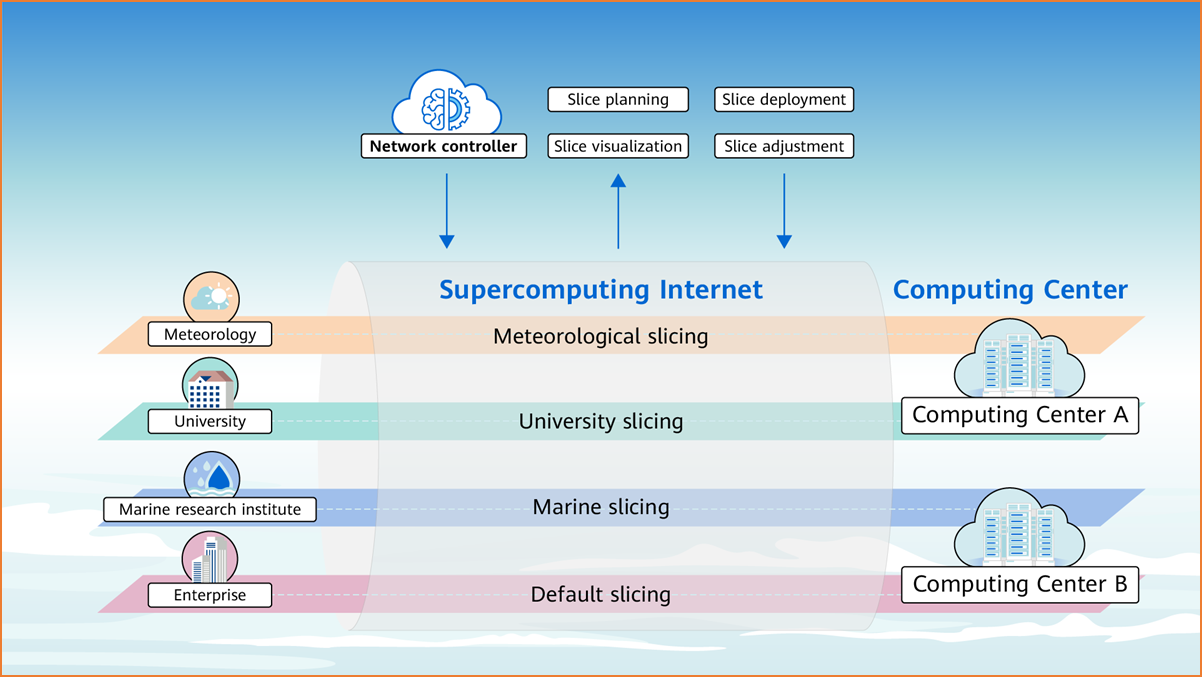

Different services need to be provided to meteorology, universities, marine research institutes, and enterprises on the same computing network based on different requirements for network service quality.

Traditional networks provide differentiated experience for different services based on the private line concept, with VPN private lines working as a soft isolation technology. The computing network instead relies on the private network concept, with network slicing working as a hard isolation technology. On a physical computing network, network slices isolate resources to form multiple virtual networks. Different services are independently transmitted on their own network slices, implementing deterministic lossless transmission and secure isolation.

Slices of the computing network are planned on demand, with all services running on the default slice by default. For services with special requirements, slices can be created based on different SLA requirements. For example, if the meteorological center requires a VPN with a guaranteed bandwidth of 1 Gbit/s, a dedicated network slice meeting such requirements can be created for the meteorological service.
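The on-demand slice planning described here can be sketched as a small bookkeeping model. This is only an illustration of the idea: the `SlicedLink` class, its methods, and the 10 Gbit/s link capacity are assumptions, and real slicing is enforced in network hardware rather than in a Python object.

```python
class SlicedLink:
    """A physical link whose bandwidth is partly reserved by dedicated slices."""

    def __init__(self, capacity_gbps: float):
        self.capacity_gbps = capacity_gbps
        self.slices: dict[str, float] = {}   # service name -> guaranteed Gbit/s

    def reserved_gbps(self) -> float:
        return sum(self.slices.values())

    def create_slice(self, service: str, guaranteed_gbps: float) -> bool:
        """Reserve bandwidth for a service; refuse if the link cannot guarantee it."""
        already = self.slices.get(service, 0.0)
        if self.reserved_gbps() - already + guaranteed_gbps > self.capacity_gbps:
            return False
        self.slices[service] = guaranteed_gbps
        return True

    def slice_for(self, service: str) -> str:
        """Services without a dedicated slice run on the default slice."""
        return service if service in self.slices else "default"


link = SlicedLink(capacity_gbps=10.0)    # assumed link capacity
link.create_slice("meteorology", 1.0)    # 1 Gbit/s guarantee from the text
print(link.slice_for("meteorology"), link.slice_for("web"))
```

The admission check in `create_slice` reflects the point of hard isolation: a guarantee is only granted if the physical link can actually honor it alongside the existing slices.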

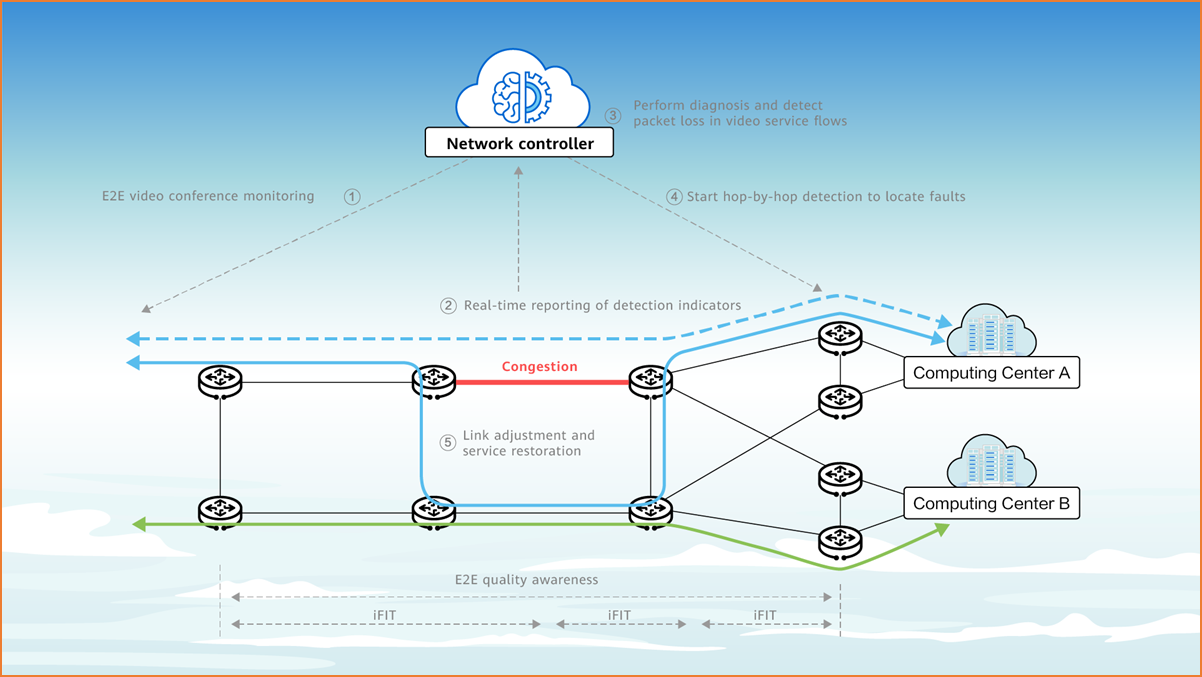

Network slicing ensuring lossless transmission and secure isolation of computing networks

- Real-time monitoring and intelligent O&M of computing networks through IFIT.

The number of connections on a computing network is huge. As such, implementing unified monitoring and management of these connections poses new challenges to network O&M capabilities.

Traditional network O&M methods face two major problems: passive perception of service loss and low efficiency in fault demarcation and locating. Service performance deterioration can be detected only after users complain, and network faults cannot be quickly located.

On a computing network, the IFIT technology can completely change this situation.

IFIT inserts specific color bits into real service flows to accurately locate the packet loss location, calculate the hop-by-hop delay and jitter, and even restore paths, implementing real-time network monitoring and intelligent O&M.
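The text does not spell out how the measurement itself is done. The sketch below assumes an alternate-marking style scheme, in which every node on the path counts the packets it sees with a given color during one marking period and records when it saw a marked packet. Comparing adjacent nodes then localizes loss and yields per-hop delay. The function names, node names, and counter values are hypothetical.

```python
def locate_loss(counters: list[tuple[str, int]]) -> list[tuple[str, str, int]]:
    """Given per-node packet counts for one color period, report lossy hops.

    `counters` lists (node, packets seen with this color) in path order.
    """
    lossy = []
    for (up_node, up_count), (down_node, down_count) in zip(counters, counters[1:]):
        lost = up_count - down_count
        if lost > 0:
            lossy.append((up_node, down_node, lost))
    return lossy


def hop_delays(timestamps_ms: list[tuple[str, float]]) -> list[tuple[str, str, float]]:
    """Per-hop delay from the times at which each node saw the same marked packet."""
    return [(a, b, tb - ta)
            for (a, ta), (b, tb) in zip(timestamps_ms, timestamps_ms[1:])]


# Hypothetical counters for packets carrying one color during one period.
period = [("PE1", 1000), ("P1", 1000), ("P2", 997), ("PE2", 997)]
print(locate_loss(period))
print(hop_delays([("PE1", 0.0), ("P1", 2.0), ("PE2", 5.0)]))
```

Because the counters are taken on real service packets rather than on injected probes, the measured loss and delay reflect what the service actually experiences.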

Real-time monitoring and intelligent O&M of computing networks through IFIT

- Cloud-Network-Security integration builds collaborative security protection for computing networks.

Security is the cornerstone of network stability. However, traditional network border-based protection cannot meet the requirements of computing networks.

Typically, different security devices are deployed at different locations (cloud, network, and device) and security products are not compatible or associated with each other. As a result, the security devices cannot adapt to path changes after services are migrated to the cloud, resulting in ineffective protection and low efficiency.

The cloud-network-security integrated architecture can be used to build a trustworthy network to implement terminal security, network access security, network security, cloud access security, and cloud (platform, application, and data) security.

- Qiankun Cloud (security brain) and Tianguan (security boundary) are deployed to provide security services such as boundary protection, threat analysis, and regular network protection.

- The security resource pool and security service chain are deployed to provide 24-hour intelligent analysis, online security expert services, tenant-level security cloud services, and integrated scheduling of security computing power and networks based on SRv6+SFC orchestration.

- The adaptive quantum encryption solution is used to upgrade the traditional Internet IPsec encryption mechanism and provide security assurance for multi-point distribution, flexible networking, quantum-level keys, and native encryption.

- The traditional access system (one-time verification followed by permanent trust) is replaced by a zero-trust solution that builds on identity security as its cornerstone and provides continuous verification, dynamic authorization, and global defense.

Building A Large National Computing Network Through the East-to-West Computing Resource Transfer Project

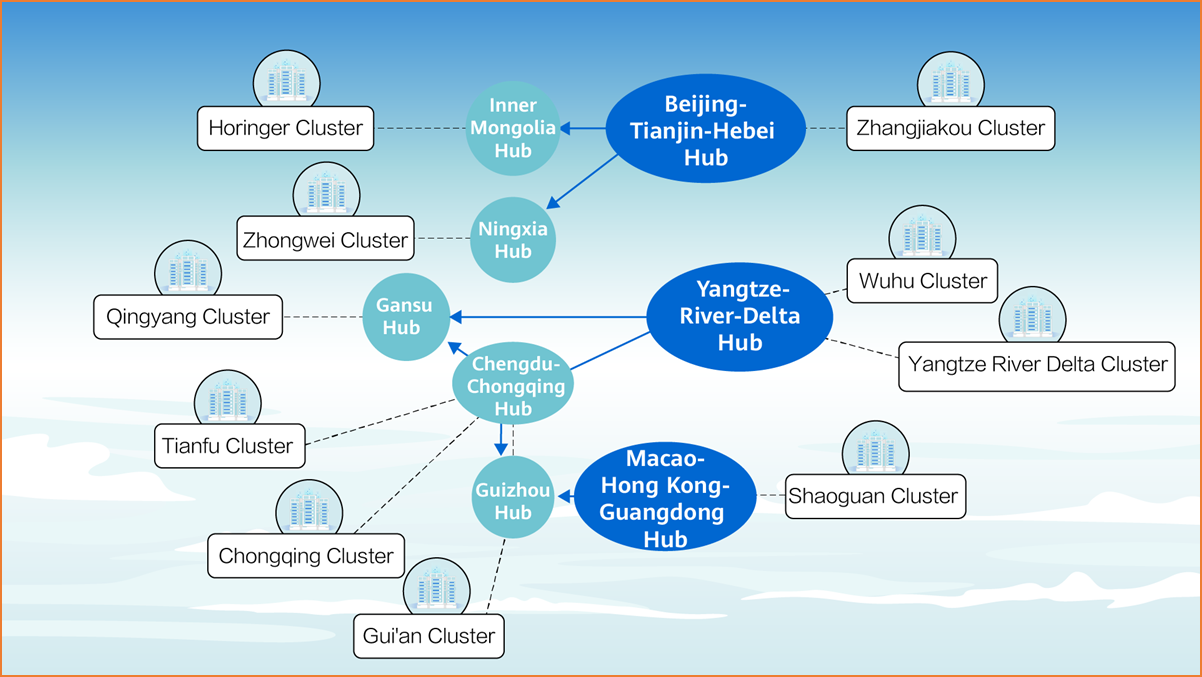

In May 2021, China proposed the East-to-West Computing Resource Transfer Project. The aim of the project is to build a new computing network system that integrates data centers, cloud computing, and big data to meet the computing power requirements of eastern regions by utilizing western resources. Optimizing data center construction layout will help promote collaboration between the east and the west.

In February 2022, China's National Development and Reform Commission, Office of the Central Cyberspace Affairs Commission, Ministry of Industry and Information Technology, and National Energy Administration jointly issued a notice to start the construction of national computing hub nodes in eight regions and outlined plans to establish 10 national data center clusters.

East-to-West Computing Resource Transfer Project

At present, the overall layout design of the national integrated big data center system has been completed, and the East-to-West Computing Resource Transfer Project has officially started.

So why is the project needed?

As mentioned earlier, computing power and computing networks are of great importance:

- Computing power is the power of production. In the digital economy era, computing power has become the core engine that drives national economic growth. To improve a country's computing power is to stimulate its economic development. The higher the computing power index, the more evident the economic growth.

- A computing network connects central computing nodes distributed at different geographical locations through new network technologies. The purpose is to perceive the status of computing resources in real time, coordinate the allocation and scheduling of computing tasks, and transmit data to form a global network that senses, allocates, and schedules computing resources. On the network, computing power, data, and applications can be aggregated and shared.

Why is the East-to-West Computing Resource Transfer Project necessary in this scenario? The following three key factors for data center construction and operation are considered:

- Land: A data center involves many IT hardware devices. And as it requires the deployment of systems such as power supply, security control, and heat dissipation, the data center will occupy an increasingly larger area. According to public data, a single data center is roughly the size of 60 football fields. Such a large footprint is impractical in first- and second-tier cities in eastern China where land is extremely expensive. In contrast, fourth- and fifth-tier cities in central and western China have put aside large amounts of land for low-cost construction of data centers.

- Electricity: The data center industry is known for being energy intensive. According to public data, about 56.7% of a data center's operation costs are electricity fees. Every year, data centers account for more and more of the total power consumption in China, with this proportion expected to exceed 4% by 2025. Compared with the eastern region, the central and western regions have abundant clean energy such as wind power, PV, and hydropower. In addition, the industrial power consumption demand in the central and western regions is far lower than that in eastern cities, which helps to drive down the cost of power.

- Climate: A data center not only consumes a significant amount of energy, but also produces a lot of heat during operation. If this heat is not dissipated quickly through cooling systems, hardware devices may break down. According to public data, 40% of a data center's energy consumption is due to cooling. The central and western regions of China are more suitable for building data centers because they have lower temperatures. For example, Guizhou offers the best development potential of the data center industry in China as its annual average temperature is 14°C to 16°C.

The East-to-West Computing Resource Transfer Project is also changing the distribution of national computing power. This highlights the importance of computing networks for interconnecting and efficiently scheduling scattered computing power.

High computing power requirements are concentrated in eastern cities, whereas many of the sites chosen for data center construction are located far away in the west, which results in higher data transmission delays. For this reason, the eight major hubs in China still include three hubs in the eastern region: Beijing-Tianjin-Hebei, Yangtze River Delta, and Guangdong-Hong Kong-Macao Greater Bay Area.

- Computing tasks with strict low-latency requirements, such as self-driving and telemedicine, which require an E2E transmission latency of less than 10 ms, are still retained on these eastern hubs.

- For most non-real-time or offline computing requirements (e.g., cloud disk data access that can tolerate a transmission latency higher than 30 ms), the East-to-West Computing Resource Transfer Project can greatly alleviate the current supply-demand imbalance of computing power between the east and west, implementing national coordination of computing power.
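The split between the two kinds of workloads above comes down to a latency check. The sketch below is a simplified illustration: the 10 ms budget for self-driving comes from the text, while the 30 ms east-to-west latency, the 50 ms budget for cloud disk access, and the function name `place_workload` are assumptions.

```python
def place_workload(latency_budget_ms: float, west_latency_ms: float) -> str:
    """Send a workload west only if the western hub can meet its latency budget."""
    return "west" if west_latency_ms <= latency_budget_ms else "east"


WEST_LATENCY_MS = 30.0   # assumed east-to-west transmission latency (hypothetical)
for name, budget in [("self-driving", 10.0), ("cloud disk access", 50.0)]:
    print(name, "->", place_workload(budget, WEST_LATENCY_MS))
```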

The East-to-West Computing Resource Transfer Project has built a national computing network, namely a national supercomputer, and made computing power an on-demand public service.

In the future, national and even global computing networks will help us enter the intelligent world and usher in a new era that will have the same historical importance as the navigation, industrial revolution, and aerospace eras. This marks another step forward in the great course of human history.

- Author: Wu Zhuoran

- Updated on: 2023-04-08
